AWS RDS PostgreSQL Audit Logs in DataSunrise

Today it is almost unsurprising to see another headline related to data breaches or data privacy. This affects you personally as a customer of those companies – you want someone to take good care of your data. It also affects you professionally; as a person who works with data, you are that ‘someone’ for your customers. Good audit logging is one important tool in the belt of a security-aware data professional. In this article, we discuss RDS PostgreSQL Audit Logs and how to set them up with DataSunrise.

The general idea of database auditing is to know who and when accessed your database tables, and what modifications were done to them. Theoretically, all these demands can be fulfilled using native database audit mechanisms. This article will also focus on the native auditing of an AWS RDS PostgreSQL database.

There are different parameters that you can set to log activity on your AWS RDS PostgreSQL database. Basic statement logging can be provided by the standard logging facility with log_statement = all. This is acceptable for monitoring and other usages but does not provide the level of detail generally required for an audit. It is not enough to have a list of all the operations performed against the database. It must also be possible to find particular statements that are of interest to an auditor. The standard logging facility shows what the user requested. For more detailed and structured information, you can use the pgaudit extension (pgAudit on GitHub) , which you can also configure. The pgaudit plugin provides detailed session and object audit logging for Amazon RDS PostgreSQL. After you enable the pgAudit extension, you can configure the pgaudit.log parameter to audit specific databases, roles, tables, and columns.

Enabling the pgAudit extension on an AWS RDS instance running PostgreSQL

- Create an RDS Parameter Group and change the default parameter values to the values shown below:

Parameter name Value to set Log_checkpoints (optional) 0 log_connections 1 log_destination csvlog log_disconnections 1 pgaudit.log all pgaudit.role rds_pgaudit shared_preload_libraries pg_stat_statements, pgaudit Assign the Parameter group to your RDS PostgreSQL database instance (RDS Instance → Configuration → Modify → database’s Additional Configuration → DB parameter group);

Connect to your RDS PostgreSQL database using some client (psql, PgAdmin, etc.) and execute the following query to create a database role named rds_pgaudit:

CREATE ROLE rds_pgaudit;

Reboot your RDS Postgres database instance to apply the changes (RDS Instance → Action → Reboot)

Ensure that pgAudit is initialized by executing the following command:

show shared_preload_libraries;

You should receive the following response:

shared_preload_libraries -------------------------- Rdsutils,pg_stat_statements,pgaudit

Create the pgaudit extension by executing the following command:

CREATE EXTENSION pgaudit;

Ensure that the pgaudit.role is set to rds_pgaudit by executing the following command:

SHOW pgaudit.role;

You should receive the following response:

Pgaudit.role ------------------ Rds_pgaudit

You can view database log files for your DB engine by using the AWS Management Console:

- On the Amazon RDS console choose the name of the PostgreSQL DB instance that has the log file that you want to view.

- Choose the Logs & events tab.

- Scroll down to the Logs section.

- In the Logs section, choose the log that you want to view, and then choose View.

To test the audit logging, run several commands that you have chosen to audit. For example, you might run the following command:

INSERT INTO pgbench_history (tid, bid, aid, delta, mtime) VALUES (7, 1, 68611, 1557, CURRENT_TIMESTAMP);

The database logs should contain an entry similar to the following.

... 2020-12-08 11:05:14.093 UTC,"postgres","pg_test",9937,"18.222.214.187:45210",5fcf5de8.26d1,99, "INSERT",2020-12-08 11:05:12 UTC,7/1305,5563,LOG,00000,"AUDIT: SESSION,97,1,WRITE,INSERT,,,"" INSERT INTO pgbench_history (tid, bid, aid, delta, mtime) VALUES (7, 1, 68611, 1557, CURRENT_TIMESTAMP); "",<not logged>",,,,,,,,,"" ...

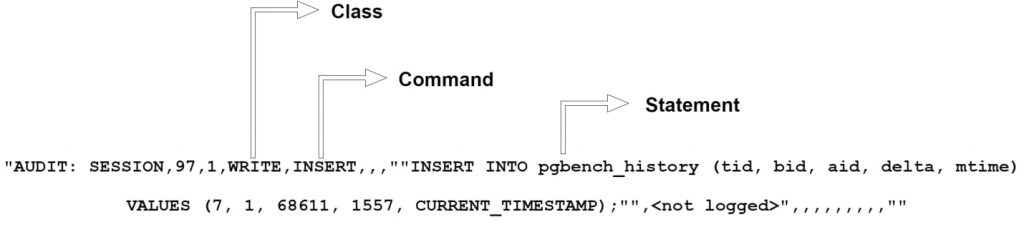

Let’s look at the format of pgAudit logs:

The CLASS categorizes the kind of statement (READ, WRITE, DDL, etc) and COMMAND tells you what subclass it is. The STATEMENT field contains the full text of the INSERT INTO and the fully-qualified name of a particular table.

We need to point out that from the auditor’s perspective such native audit logs are quite difficult to read and analyze. Native auditing needs additional software/resources (like DataSunrise) for parsing and processing in order to produce user-friendly audit trails. Besides that, native auditing means causing overhead on the database server, large audit archives require database storage, and the audit data is not captured in the format required by the auditors and security teams. In other words, auditors need logs presenting information in a meaningful manner and native database mechanisms can’t provide them with the required type of logs.

Here comes DataSunrise

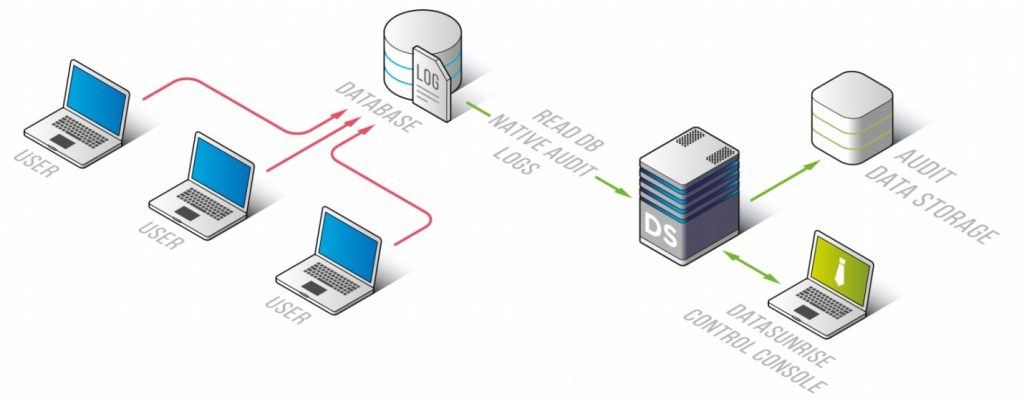

Based on the limitations of native audit mechanisms we mentioned above, let’s take a closer look at DataSunrise’s capabilities. DataSunrise can be deployed in PostgreSQL Trailing DB Audit Logs configurations. This option enables you to get auditing results collected by PostgreSQL native audit tools.

PostgreSQL database performs auditing using its integrated auditing mechanisms and saves auditing results in a dedicated CSV file depending on the selected configuration. Then DataSunrise establishes a connection with the base for more session information, downloads the audit data from the database server using the RDS API, and passes it to the Audit Storage for further analysis. First and foremost, this configuration is intended to be used for Amazon RDS databases because DataSunrise doesn’t support sniffing on RDS. This operation mode has three main drawbacks:

- If the database admin has access to the database logs, he can delete them.

- Native auditing makes a negative impact on database performance.

- It is impossible to find out what data was received for a specific request.

Configuring PostgreSQL Trailing DB Audit Logs

To use Audit Trail for auditing Amazon RDS PostgreSQL database queries You need to do the following:

- Assign proper IAM roles to your EC2 instance with DataSunrise instances:

Create an AWS IAM Policy using the following JSON:

{ "Version": "2012-10-17", "Statement": [ { "Sid": "VisualEditor0", "Effect": "Allow", "Action": [ "rds:DownloadDBLogFilePortion", "rds:DescribeDBLogFiles", "rds:DownloadCompleteDBLogFile" ], "Resource": "arn:aws:rds:<region>:<012345678901>:db:<db-instance-name>" } ] }- Attach the policy to your IAM Role (Policies → Policy actions → Attach)

- Attach the IAM Role to your DataSunrise EC2 machine (EC2 machine → Instance Settings → Attach/Replace IAM Role)

- Connect to your DataSunrise’s Web Console.

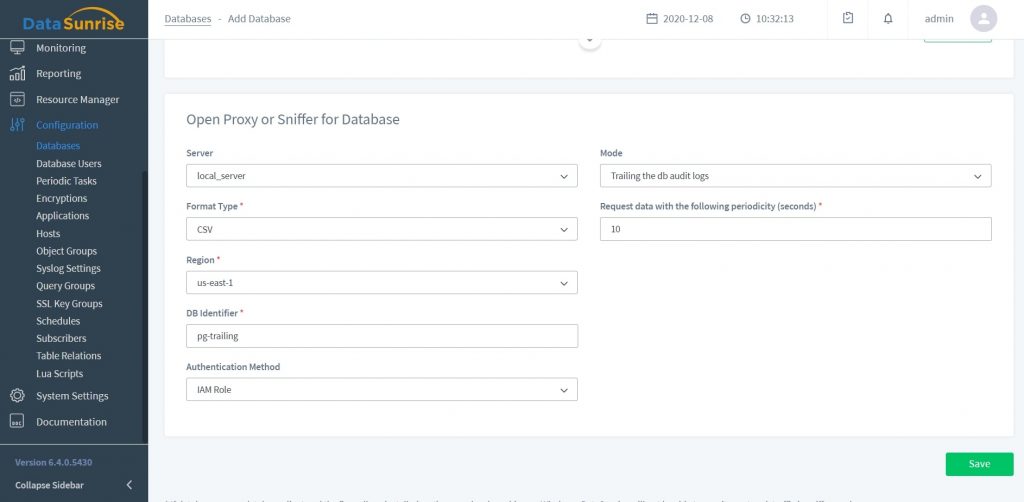

- Create a Database profile in the Configurations → Databases. In the Mode drop-down list, select Trailing the db audit logs and fill out all the required fields:

Interface element Description Server DataSunrise server Format Type Format of the file to store audit data in Region AWS Region your target database is located in DB Identifier Database Instance’s name Authentication method - IAM Role: use the attached IAM role for authentication

- Regular: authentication using AWS Access/Secret Key

Request data with the following periodicity (seconds) 10 by default

- Go to the Audit and configure an Audit Rule for PostgreSQL instance.

- Check that there are no running sessions.

- Connect to PostgreSQL database directly and execute any queries.

- Go to Audit → Transactional Trails to check for auditing results.

Why is DataSunrise useful in managing PostgreSQL Audit Logs?

DataSunrise’s Data Audit component is capable of auditing ALL user actions and queries sent to the target database. Auditing just doesn’t depend on the database user type. Thus it can audit both regular users’ queries and privileged users’ queries.

DataSunrise stores its auditing results in an integrated SQLite database or in an external database such as PostgreSQL, MS SQL Server, Vertica, Redshift, Aurora MySQL, MySQL. DS also allows you to filter DB audit logs, organize them, and generate reports based on them that are uniform for all databases. Thanks to Report Gen advanced reporting component, you can present your audited data as a customizable report suitable for your auditor’s needs. You can also create reports periodically on a schedule.