A Guide to Generative AI Audit

35% of technology companies are adopting generative AI, and artificial intelligence has become an integral part of daily life. Generative AI tools such as ChatGPT offer powerful capabilities but also raise important privacy concerns.

This article looks at Generative AI Audit. It explores the risks users face and how they can protect their personal information.

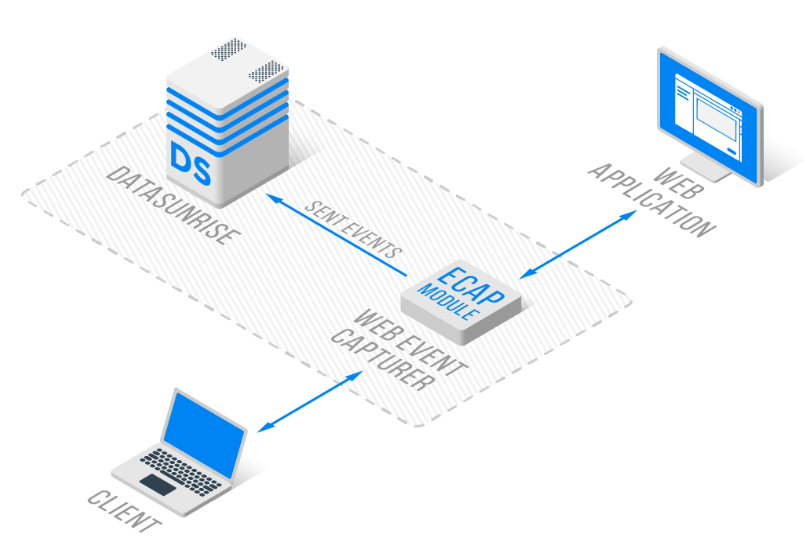

DataSunrise now offers a fifth operation mode: Web-application mode. This advanced mode incorporates cutting-edge technologies to deliver a robust and flexible solution. It seamlessly integrates with our existing user interface while providing uniform security for both ChatGPT and Salesforce applications.

Users can use the same familiar tools they use for regular databases. This ensures a smooth transition and a consistent experience across different data sources.

The Rise of Generative AI and Its Privacy Implications

Generative AI has revolutionized how we interact with technology. However, as users connect to platforms like ChatGPT, they often overlook potential privacy risks. Understanding these risks is crucial for safeguarding personal information in an increasingly AI-driven world.

Privacy Risks When Using ChatGPT

When engaging with ChatGPT, users may unknowingly expose themselves to several privacy risks:

- Data Collection: ChatGPT stores conversation histories, which may contain sensitive information.

- Unintended Information Disclosure: Users might accidentally share personal details during conversations.

- Third-Party Access: There’s potential for unauthorized access to stored data.

- AI Learning from Personal Data: The AI model may incorporate user data into its learning processes.

- Cross-Session Information Linking: Multiple conversations could be linked, revealing patterns about a user.

Protecting Personal Information in ChatGPT Queries

To mitigate privacy risks when using ChatGPT, consider these strategies:

- Minimize Personal Information: Avoid sharing unnecessary personal details in your queries.

- Use Pseudonyms: When possible, use pseudonyms or masked data instead of real names and identifiers.

- Be Cautious with Sensitive Topics: Refrain from discussing highly personal matters.

- Regularly Clear Chat History: Delete your conversation history periodically.

- Review Privacy Policies: Understand how the platform handles and protects user data.
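The first two strategies can be partly automated by masking obvious identifiers before a query leaves your machine. The following is a minimal sketch, not a complete PII detector: the `PATTERNS` table and the `mask_prompt` function are hypothetical names introduced for illustration, and the regular expressions cover only simple email, phone, and US Social Security number formats.

```python
import re

# Illustrative patterns only (assumption): real PII detection needs far
# broader coverage. SSN is listed before PHONE because the phone pattern
# would otherwise also match SSN-shaped strings.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def mask_prompt(text: str) -> str:
    """Replace each matched identifier with a placeholder such as [EMAIL]."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


masked = mask_prompt("Email jane.doe@example.com or call +1 (555) 123-4567.")
print(masked)
```

Masking on the client side means the original values never reach the chat service, so they cannot appear in its stored history.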

Auditing Chat Sessions and API Usage

Auditing chat sessions and API usage is crucial for maintaining privacy and security. Here’s how to approach it:

For Individual Users:

Regularly reviewing your conversation logs is a crucial step in maintaining privacy when using generative AI tools. Set aside time to go through your chat history and look for any sensitive information you may have shared by mistake.

This practice helps you spot possible privacy risks. It also makes you more aware of the information you share with AI systems. If you spot any concerning data, consider deleting those specific messages or clearing your entire chat history if necessary.

For those utilizing the API, monitoring your API key usage is essential. Keep track of when and how you’re using your key to ensure it hasn’t fallen into unauthorized hands. This vigilance can help you detect any unusual activity or potential security breaches early on.

Think about setting up alerts for unusual usage patterns. You can also use rotation policies for your API keys to improve security.
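As a rough illustration of both ideas, the sketch below flags hours with unusually high request counts and checks whether a key is older than a rotation window. It assumes you have already exported hourly request counts from your provider's usage dashboard. The function names, the 5x-median rule, and the 90-day window are assumptions chosen for the example, not recommendations from any provider.

```python
from datetime import datetime, timedelta
from statistics import median


def unusual_hours(hourly_counts: dict, multiplier: float = 5.0) -> list:
    """Return the hours whose request count exceeds multiplier x the median.

    The median is used as the baseline because a single large spike
    barely moves it, unlike the mean.
    """
    if not hourly_counts:
        return []
    baseline = median(hourly_counts.values())
    return [hour for hour, count in hourly_counts.items()
            if count > multiplier * baseline]


def key_needs_rotation(created_at: datetime, now: datetime,
                       max_age_days: int = 90) -> bool:
    """Return True when the key is older than the rotation window."""
    return now - created_at > timedelta(days=max_age_days)


# Hypothetical usage data: a 03:00 spike stands out from normal traffic.
usage = {"2024-05-01T09": 10, "2024-05-01T10": 12,
         "2024-05-01T11": 9, "2024-05-01T03": 300}
print(unusual_hours(usage))
print(key_needs_rotation(datetime(2024, 1, 1), datetime(2024, 5, 1)))
```

A flagged hour is only a prompt to investigate. Legitimate batch jobs can also produce spikes, so the multiplier should be tuned to your own traffic.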

Lastly, consider using privacy-focused browsers when interacting with generative AI tools online. These browsers limit data collection while you browse and add extra protection for your personal information, often through built-in features like tracker blocking, fingerprinting protection, and automatic private browsing modes.

Using these browsers can help reduce the data collected about your AI interactions. This can protect your privacy online, even if it is not a complete solution.

For Organizations:

- Implement Access Controls: Restrict who can use ChatGPT or its API within the organization.

- Set Up Logging Systems: Create robust logs of all AI interactions for review.

- Conduct Regular Audits: Periodically review chat logs and API usage for potential security issues.

- Train Employees: Educate staff on best practices for using generative AI tools.
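The first two measures can be combined in a thin wrapper around whatever function sends prompts to the AI service. The sketch below is an assumption-laden illustration rather than a description of any product: `audited_call`, `send_fn`, and the record fields are hypothetical, and the log is written as one JSON object per line so that it can be reviewed later.

```python
import io
import json
from datetime import datetime, timezone
from typing import Callable, Set, TextIO


def audited_call(user: str, prompt: str, send_fn: Callable[[str], str],
                 log: TextIO, allowed_users: Set[str]) -> str:
    """Enforce an allow-list, then log and forward the prompt.

    Denied attempts are logged too, so audits can see who tried to
    use the service without permission.
    """
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "allowed": user in allowed_users,
        "prompt": prompt,
    }
    if not record["allowed"]:
        log.write(json.dumps(record) + "\n")
        raise PermissionError(f"{user} is not allowed to use the AI service")
    response = send_fn(prompt)
    record["response_chars"] = len(response)
    log.write(json.dumps(record) + "\n")
    return response


# Demo with an in-memory log and a stand-in for the real API call.
demo_log = io.StringIO()
audited_call("alice", "Summarize the Q3 report", lambda p: "stub reply",
             demo_log, allowed_users={"alice"})
print(demo_log.getvalue())
```

In practice the log target would be an append-only file or a central logging service rather than an in-memory buffer. Because the log stores prompts verbatim, it needs the same protection as the data it records.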

The Role of Specialized Audit Tools

Specialized tools can significantly enhance the auditing process. They offer features like:

- Automated Log Analysis: Quickly identify potential privacy breaches.

- Real-Time Monitoring: Track AI interactions as they happen.

- Customizable Alerts: Set up notifications for specific types of data usage.

- Compliance Reporting: Generate reports to meet regulatory requirements.
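To make the first and third features concrete, here is a minimal sketch of automated log analysis with customizable alert rules. It assumes audit records are stored as JSON lines with `user` and `prompt` fields. That format, the rule names, and the functions `scan_log` and `summarize` are hypothetical and do not reflect how any particular audit tool works internally.

```python
import json
import re
from collections import Counter
from typing import Dict, Iterable, List, Optional, Pattern

# Example rules (assumptions): each maps an alert name to a pattern.
DEFAULT_RULES: Dict[str, Pattern] = {
    "email_address": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "credential_keyword": re.compile(r"\b(password|passwd|secret)\b", re.IGNORECASE),
}


def scan_log(lines: Iterable[str],
             rules: Optional[Dict[str, Pattern]] = None) -> List[dict]:
    """Return one alert per (log line, rule) pair whose pattern matches the prompt."""
    rules = DEFAULT_RULES if rules is None else rules
    alerts = []
    for line_no, line in enumerate(lines, start=1):
        if not line.strip():
            continue  # skip blank lines
        record = json.loads(line)
        for name, pattern in rules.items():
            if pattern.search(record.get("prompt", "")):
                alerts.append({"line": line_no,
                               "user": record.get("user"),
                               "rule": name})
    return alerts


def summarize(alerts: List[dict]) -> Counter:
    """Count alerts per rule, for example as input to a periodic report."""
    return Counter(alert["rule"] for alert in alerts)


sample = [
    '{"user": "alice", "prompt": "Write to bob@example.com"}',
    '{"user": "carol", "prompt": "What is a proxy?"}',
    '{"user": "dave", "prompt": "My password is hunter2"}',
]
print(scan_log(sample))
```

Passing a different `rules` dictionary is how the alerts are customized in this sketch, and the per-rule counts from `summarize` can feed a simple periodic report.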

DataSunrise: A Comprehensive Solution for ChatGPT Auditing

DataSunrise offers powerful ChatGPT Audit capabilities, storing audit data in a standardized format. Our solution uses proxy technology to audit GPT sessions comprehensively. The Web-application mode setup, based on a Docker Compose file, ensures easy and reliable implementation.

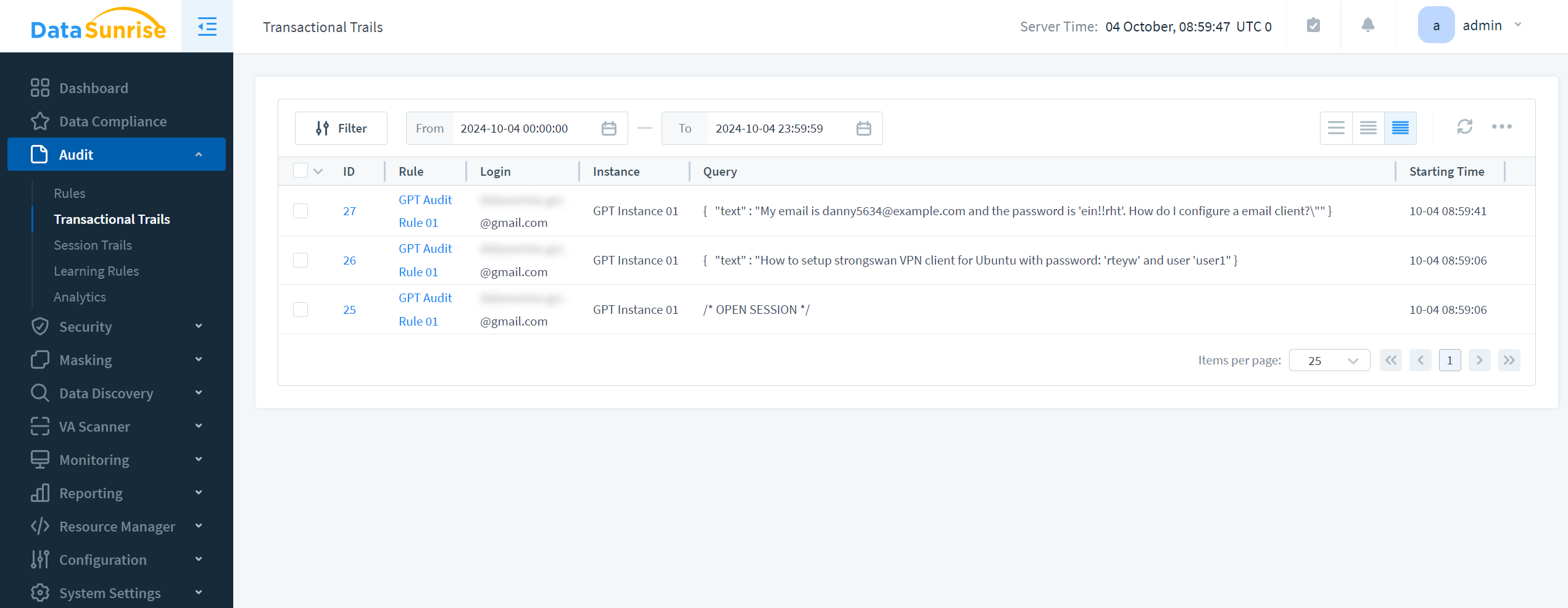

Our standard user interface works seamlessly across all transactional trails. No matter what data you are auditing, you will find the same tools. These tools help you track transactions, create instances, and generate reports. This consistent approach simplifies your workflow, whether you’re dealing with database operations, ChatGPT interactions, or Salesforce activities.

Conclusion: Balancing Innovation and Privacy

As generative AI continues to evolve, so too must our approaches to privacy and security. By understanding the risks, applying protective measures, and using strong auditing tools, we can use AI safely and protect our personal information.

Regular audits, user training, and strong tools like those from DataSunrise help protect against privacy breaches in generative AI. These elements work together to identify vulnerabilities, promote responsible AI use, and enable swift responses to potential issues. By using proactive monitoring, informed users, and advanced technologies, organizations can balance AI innovation with privacy protection. This approach helps create a safer and more secure future for AI.

DataSunrise provides flexible and cutting-edge tools for database security, including comprehensive audit and compliance solutions. These tools are part of a broader suite of features designed to protect sensitive data across various platforms. To see how DataSunrise can improve your organization’s data security, visit our website, where you can try our online demo.