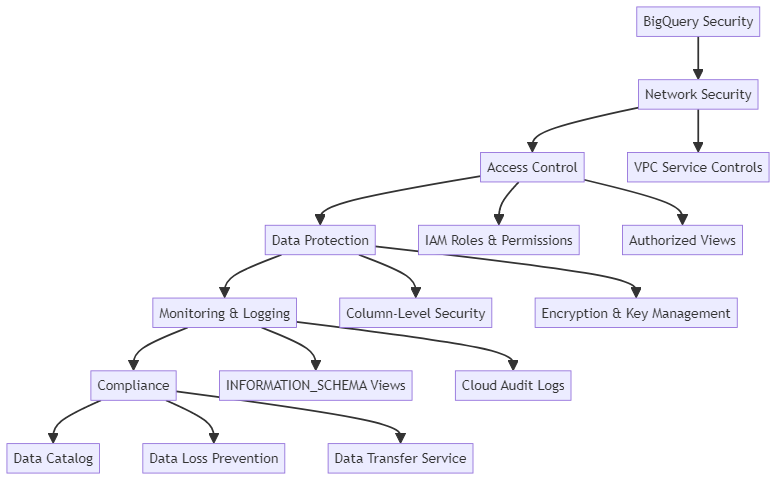

BigQuery Security Measures: Encryption, Access Control, and Compliance

Google BigQuery offers powerful data warehousing capabilities, but with great power comes great responsibility. Securing your BigQuery environment is crucial to protect sensitive information and maintain compliance with data regulations. This article will explore various aspects of BigQuery security, from network access controls to fine-grained permissions and monitoring.

Understanding VPC Service Controls

VPC Service Controls act as a virtual firewall for your BigQuery resources. You can protect your data in BigQuery by choosing which networks and IP addresses are allowed to access it. This adds an extra layer of protection against unauthorized access attempts.

To set up VPC Service Controls for BigQuery, you’ll need to create an access level policy in your Google Cloud Console. This policy defines the IP ranges allowed to interact with your BigQuery resources. Once the policy is in place, you can create a service perimeter that includes BigQuery as a restricted service.

For example, you might create a policy that only allows access from your corporate network’s IP range. This ensures that BigQuery queries can only be executed from within your organization’s network, reducing the risk of external threats.

Implementing VPC Service Controls requires careful planning. You need to consider different ways to access BigQuery, such as on-site networks, cloud VPNs, and other Google Cloud projects.

These methods provide various options for connecting to BigQuery. You should explore all the available options to determine the best approach for your needs. It’s often helpful to start with a dry-run perimeter to test your configuration before enforcing it.

Implementing IAM Roles and Permissions

Identity and Access Management (IAM) is the backbone of BigQuery security. It allows you to control who has access to your BigQuery resources and what actions they can perform. The most powerful role in BigQuery is roles/bigquery.admin, which grants full control over all BigQuery resources in a project.

However, it’s generally better to follow the principle of least privilege and assign more specific roles. For instance, you might give data analysts the bigquery.user role, which allows them to run queries and create datasets, but not modify existing dataset permissions.

Here’s an example of how you might use the BigQuery CLI to grant a user the bigquery.user role:

bq add-iam-policy-binding --member=user:analyst@example.com --role=roles/bigquery.user project-id

This command adds the specified user to the project with the bigquery.user role.

Regularly auditing your IAM policies is important to ensure they remain appropriate. As employees change roles or leave the organization, their permissions should be updated or revoked accordingly. You can use the IAM recommender in Google Cloud to identify and remove overly permissive roles.

Creating and Securing BigQuery Views

BigQuery views are a powerful tool for implementing row-level and column-level security. You can use virtual tables to filter or change data before showing it to users.

To create a view in BigQuery, you can use the following SQL syntax:

CREATE VIEW `project.dataset.view_name` AS SELECT column1, column2 FROM `project.dataset.table_name` WHERE condition;

For example, you might create a view that only shows sales data for a specific region:

CREATE VIEW `sales.northeast_sales` AS SELECT * FROM `sales.all_sales` WHERE region = 'Northeast';

Grant users access to a specific view instead of the table, so they only see data related to their role.

You can also use views to implement more complex security rules. For instance, you could create a view that only shows data for the current user:

CREATE VIEW `project.dataset.my_data` AS SELECT * FROM `project.dataset.all_data` WHERE user_email = SESSION_USER();

This view will automatically filter the data based on the email of the user running the query.

Authorized Views for Cross-Dataset Access

Authorized views in BigQuery allow you to create views in one dataset. These views can access data in another dataset. The system grants access even if the user does not have permission to view the original dataset. This is particularly useful for implementing fine-grained access controls.

To set up an authorized view, you first create the view in one dataset, then grant that view access to the source dataset. Here’s an example:

-- Create the view in dataset A CREATE VIEW `projectA.datasetA.sales_summary` AS SELECT date, SUM(amount) as total_sales FROM `projectB.datasetB.detailed_sales` GROUP BY date; -- Authorize the view to access data in dataset B bq add-iam-policy-binding \ --member=serviceAccount:service-PROJECT_NUMBER@gcp-sa-bigquery.iam.gserviceaccount.com \ --role=roles/bigquery.dataViewer \ projectB:datasetB

This creates a summary view in dataset A that can access detailed sales data in dataset B, without giving users direct access to the detailed data.

Powerful authorized views should be used judiciously. Every time you give someone permission to view something, it makes your security system more complicated. Make sure to keep track of these permissions and check them often.

Implementing Column-Level Security

Column-level security in BigQuery allows you to restrict access to specific columns within a table. This is particularly useful when dealing with sensitive information like personal identifiable information.

To implement column-level security, you can use BigQuery’s policy tags feature. First, you create a taxonomy of policy tags, then apply these tags to specific columns. Finally, you grant users or groups access to specific policy tags.

Here’s an example of how you might create a policy tag using the BigQuery Data Policy API:

POST https://datacatalog.googleapis.com/v1/projects/{project}/locations/{location}/taxonomies

{

"displayName": "Sensitive Data",

"description": "Tags for sensitive data columns",

"activatedPolicyTypes": ["FINE_GRAINED_ACCESS_CONTROL"]

}

You can use categories and labels that you create on columns in your BigQuery setup. You can also control access with IAM rules.

Column-level security can significantly improve your data protection, but it also adds complexity to your data model. It’s important to have a clear strategy for which columns need protection and how access to these columns will be managed.

Monitoring and Logging in BigQuery

Effective security isn’t just about prevention; it’s also about detection and response. BigQuery provides robust logging and monitoring capabilities to help you track usage and identify potential security issues.

You can use BigQuery’s INFORMATION_SCHEMA views to query metadata about your BigQuery resources. For example, to see all queries run in the last day, you might use:

SELECT * FROM `region-us`.INFORMATION_SCHEMA.JOBS_BY_PROJECT WHERE creation_time == TIMESTAMP_SUB(CURRENT_TIMESTAMP(), INTERVAL 1 DAY) AND job_type = 'QUERY' ORDER BY creation_time DESC;

This query gives specific details about every job. It includes the user who executed the job, the query text used, and the volume of data processed.

In addition to INFORMATION_SCHEMA views, you can also use Cloud Audit Logs to track BigQuery activity. Cloud Audit Logs capture a variety of events, including dataset creation and deletion, table updates, and query executions. You can export these logs to Cloud Storage or BigQuery for long-term retention.

Implementing GCP Org Policies

GCP Organization Policies provide a centralized way to manage security controls across your entire Google Cloud organization. You can use these rules to make sure BigQuery is secure, like making sure all tables have an encryption key.

To set up an org policy, you use the GCP Console or the gcloud command-line tool. For example, to require all BigQuery datasets to be region-restricted:

gcloud resource-manager org-policies enable-enforce \ constraints/bigquery.restrictDatasetLocation \ --organization=ORGANIZATION_ID

This rule ensures that all new datasets have a listed location. It prevents accidental creation of datasets that span multiple regions. It also prevents breaking data residency rules.

Org policies can be a powerful tool for enforcing consistent security practices across your organization. However, organizations should implement them carefully, as overly restrictive policies can hinder legitimate work. It’s often helpful to start with audit-only policies before enforcing them.

Handling Permission Denied Errors

Even with strong security, users may still get “permission denied” errors when trying to access BigQuery resources. One common error is “permission bigquery.datasets.update denied on dataset”.

This error often occurs when a user tries to modify a dataset they don’t have sufficient permissions for. To resolve this, you need to grant the user the bigquery.dataEditor role (or a custom role with equivalent permissions) on the dataset.

You can do this using the bq command-line tool:

bq add-iam-policy-binding \ --member=user:username@example.com \ --role=roles/bigquery.dataEditor \ project:dataset

Only give users or service accounts the least amount of permissions needed to follow the principle of least privilege.

When troubleshooting permission issues, it’s often helpful to use the IAM Policy Troubleshooter in the Google Cloud Console. This tool can help you understand why a user does or doesn’t have a particular permission.

Advanced BigQuery Security Techniques

For more complex security requirements, BigQuery offers several advanced features. One such feature is the ability to use user-defined functions (UDFs) to implement dynamic data masking.

For example, you could create a UDF that masks email addresses:

CREATE FUNCTION `project.dataset.mask_email`(email STRING) RETURNS STRING AS ( CASE WHEN email IS NULL THEN NULL ELSE CONCAT(LEFT(email, 1), '***@', SPLIT(email, '@')[OFFSET(1)]) END );

You can then use this function in views or queries to automatically mask email addresses for users who shouldn’t see the full values.

Another advanced technique is using BigQuery’s GROUP BY ALL feature for aggregate data access. This feature allows you to create summary views that group data by non-aggregated columns. This simplifies access to aggregated data without showing individual records.

CREATE VIEW `project.dataset.sales_summary` AS SELECT DATE_TRUNC(date, MONTH) as month, SUM(amount) as total_sales FROM `project.dataset.detailed_sales` GROUP BY ALL;

This view will automatically display any new columns added to the detailed_sales table. This feature makes it easier to manage the table in the future.

Encryption and Key Management

BigQuery automatically encrypts all data at rest, but for additional security, you can use customer-managed encryption keys (CMEK). With CMEK, you manage your own encryption keys using Cloud Key Management Service (KMS).

To use CMEK with BigQuery, you first create a key ring and key in KMS, then specify this key when creating a dataset:

bq mk --dataset \ --default_kms_key projects/[KEY_PROJECT_ID]/locations/[LOCATION]/keyRings/[KEYRING_NAME]/cryptoKeys/[KEY_NAME] \ [PROJECT_ID]:[DATASET]

Using CMEK gives you more control over your data encryption, but it also comes with additional management responsibilities. You’ll need to ensure that your keys are properly secured and that you have processes in place for key rotation and recovery.

Data Governance and Compliance

Effective data governance is crucial for maintaining compliance with regulations like GDPR, HIPAA, and CCPA. BigQuery provides several features to support data governance:

- Data Catalog: This fully managed, scalable metadata management service can help you discover, understand, and manage your BigQuery datasets.

- Data Loss Prevention (DLP): You can use Cloud DLP to scan your BigQuery tables for sensitive information and automatically apply appropriate controls.

- BigQuery Data Transfer Service: This service helps you set up and manage regular data loads from various sources. It ensures that your data stays current and accurate.

When implementing data governance in BigQuery, it’s important to consider the entire data lifecycle, from ingestion to deletion. You should have clear policies in place for data retention, access control, and data quality management.

Conclusion

Securing BigQuery requires multiple layers of security, including network controls and permissions with IAM roles and authorized views. By using policy tags and monitoring/logging, you can make your BigQuery environment more secure.

Remember that security is an ongoing process. Regularly review your security settings, monitor for unusual activity, and stay updated on the latest BigQuery security features to ensure you protect your data. By using the correct methods, you can make the most of BigQuery while also ensuring data security and compliance.

As your BigQuery usage grows, consider implementing automated security checks and compliance audits. Tools like Cloud Security Command Center can help you see how secure your Google Cloud environment is, including BigQuery.

Finally, don’t forget the human element of security. Regular training for your team on BigQuery security best practices and your company’s specific policies is crucial. Encouraging a culture of security awareness helps everyone keep data safe.