Data-Driven Testing

Introduction

According to the TIOBE Index, which tracks the popularity of programming languages, there were approximately 250 programming languages in use in 2004. By 2024, this number had grown to over 700 active programming languages. In the ever-evolving world of software development, ensuring the quality and reliability of applications is paramount. Data-driven testing has emerged as a powerful approach to achieve this goal.

By separating test data from test scripts, it allows for more efficient and comprehensive software testing. This article delves into the fundamentals of this testing, exploring its benefits, implementation strategies, and best practices.

What is Data-Driven Testing?

Data-driven testing is a method in software testing where the same test is run multiple times with different data. This approach separates test data from test logic, allowing testers to create more flexible and maintainable test suites.

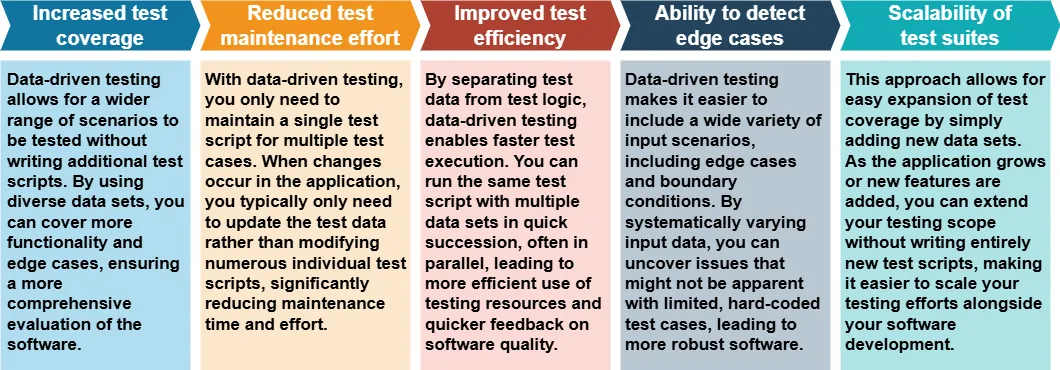

Key Benefits

The Role of Test Data in Data-Driven Testing

Test data plays a crucial role in the success of data-driven testing. High-quality test data ensures that your tests cover a wide range of scenarios, including both typical and edge cases.

Types of Test Data

- Real data: Actual production data (anonymized for privacy)

- Synthetic data: Artificially generated data

- Masked data: Modified real data to protect sensitive information

Implementing Data-Driven Testing

To implement testing with large dataset effectively, follow these steps:

- Identify test scenarios

- Design test cases

- Prepare test data

- Create parameterized test scripts

- Execute tests with multiple data sets

- Analyze results

Example: Data-Driven Testing with Selenium WebDriver

Let’s consider a simple example of data-driven testing using Selenium WebDriver and Python:

import csv

from selenium import webdriver

from selenium.webdriver.common.by import By

def login_test(username, password, expected_result):

driver = webdriver.Chrome()

driver.get("https://example.com/login")

driver.find_element(By.ID, "username").send_keys(username)

driver.find_element(By.ID, "password").send_keys(password)

driver.find_element(By.ID, "login-button").click()

actual_result = "success" if "Welcome" in driver.title else "failure"

assert actual_result == expected_result, f"Test failed for {username}"

driver.quit()

# Read test data from CSV file

with open('login_test_data.csv', 'r') as file:

reader = csv.reader(file)

next(reader) # Skip header row

for row in reader:

username, password, expected_result = row

login_test(username, password, expected_result)In this example, we’ve created a parameterized test script that reads test data from a CSV file. Each row in the file contains a username, password, and expected result. The script runs the login test for each set of credentials, verifying the outcome against the expected result.

Synthetic Data in Data-Driven Testing

Synthetic data is artificially generated data that mimics the characteristics of real data. It’s particularly useful in data-driven testing when real data is unavailable or when additional test scenarios are needed.

Benefits of Synthetic Data

- Increased test coverage

- Protection of sensitive information

- Ability to generate large volumes of data quickly

- Creation of edge cases and rare scenarios

Generating Synthetic Data

There are various tools and techniques for generating synthetic data:

- Random data generation

- Model-based data generation

- AI-powered synthetic data generation

At DataSunrise, we’ve implemented all these techniques, positioning our solution at the forefront of the market. We leverage machine learning libraries for sophisticated synthetic data generation and employ cutting-edge AI-driven tools for precise data masking and efficient discovery. This combination of technologies ensures that DataSunrise offers unparalleled capabilities in data protection and test data management.

Example of generating synthetic user data using Python:

import random

import string

def generate_user_data(num_users):

users = []

for _ in range(num_users):

username = ''.join(random.choices(string.ascii_lowercase, k=8))

password = ''.join(random.choices(string.ascii_letters + string.digits, k=12))

email = f"{username}@example.com"

users.append((username, password, email))

return users

# Generate 100 synthetic user records

synthetic_users = generate_user_data(100)This script generates random usernames, passwords, and email addresses for testing purposes.

Masked Data in Data-Driven Testing

Masked data is real data that has been modified to protect sensitive information while maintaining its statistical properties and relationships. It’s an essential technique in data-driven testing when working with production data.

Benefits of Data Masking

- Protection of sensitive information

- Compliance with data privacy regulations

- Realistic test data that reflects production scenarios

- Reduced risk of data breaches during testing

Data Masking Techniques

- Substitution

- Shuffling

- Encryption

- Nulling out

Example of a simple data masking function in Python:

import hashlib

def mask_email(email):

username, domain = email.split('@')

masked_username = hashlib.md5(username.encode()).hexdigest()[:8]

return f"{masked_username}@{domain}"

# Example usage

original_email = "john.doe@example.com"

masked_email = mask_email(original_email)

print(f"Original: {original_email}")

print(f"Masked: {masked_email}")This function masks the username part of an email address using a hash function, preserving the domain for realistic testing.

Best Practices for Data-Driven Testing

To make the most of data-driven testing or performance testing, consider these best practices:

- Maintain a diverse set of test data

- Regularly update and refresh test data

- Use version control for test data management

- Implement data validation checks

- Automate data generation and masking processes (save time)

- Document data dependencies and relationships

Data Validation Testing

Data validation testing is a crucial aspect of data-driven testing. It ensures that the application handles various input data correctly, including valid, invalid, and edge cases.

Types of Data Validation Tests

- Boundary value analysis

- Equivalence partitioning

- Error guessing

- Combinatorial testing

Example of a data validation test for a user registration form:

import pytest

def validate_username(username):

if len(username) < 3 or len(username) > 20:

return False

if not username.isalnum():

return False

return True

@pytest.mark.parametrize("username, expected", [

("user123", True),

("ab", False),

("verylongusernameoverflow", False),

("valid_user", False),

("validuser!", False),

])

def test_username_validation(username, expected):

assert validate_username(username) == expectedThis test uses pytest to validate usernames against various criteria, including length and allowed characters.

Challenges in Data-Driven Testing

While this type of testing offers numerous benefits, it also comes with challenges:

- Data management complexity

- Ensuring data quality and relevance

- Handling large volumes of test data

- Maintaining data privacy and security

- Interpreting test results across multiple data sets

Tools for Data-Driven Testing

Several tools can facilitate testing:

Selenium WebDriver: A popular open-source tool for automating web browsers. It supports multiple programming languages and allows testers to create robust, browser-based regression automation suites and tests.

JUnit: A unit testing framework for Java that supports the creation and running of automated tests. It provides annotations to identify test methods and includes assertions for testing expected results.

TestNG: An advanced testing framework inspired by JUnit but with additional functionalities. It supports parallel execution, and flexible test configuration.

Cucumber: A behavior-driven development (BDD) tool that allows you to write test cases in plain language. It supports data-driven testing through the use of scenario outlines and examples tables.

Apache JMeter: An open-source load testing tool that can be used for data-driven testing of web applications. It allows you to create test plans with various samplers and assertions, supporting CSV data sets for parameterization.

Summary and Conclusion

Data-driven testing is a powerful approach to software testing that separates test logic from test data. Testers can improve their test suites by using different types of test data, such as synthetic and masked data. This methodology enables better test coverage, improved maintenance, and enhanced ability to detect edge cases.

Software systems are becoming more complex. It is important to use testing to ensure that the software functions properly and is reliable. Development teams can use best practices and tools for data-driven testing. This helps them create high-quality software products.

DataSunrise provides easy-to-use tools for database security, including synthetic data generation and data masking. It is great for organizations wanting to use testing strategies based on data. These tools can significantly enhance your data-driven testing efforts while ensuring data privacy and compliance.

To explore how DataSunrise can support your testing needs, we invite you to visit our website for an online demo. Experience firsthand how our solutions can streamline your testing processes and improve overall software quality.