Database Logging: Best Practices and Solutions

Introduction

A study by NewVantage Partners found that 91.9% of leading businesses report ongoing investments in data and AI initiatives. As organizations rely more heavily on data, effective logging becomes crucial for maintaining the health, security, and performance of database systems. But where should those logs be stored, and how? This article looks at the storage options for database logs and the practices that keep a logging strategy efficient and informative.

What is Database Logging?

Database logging is the process of recording events, actions, and changes within a database system. It’s like keeping a detailed diary of everything that happens in your database, from user actions to system processes.

The Specifics of Database Operation Logging

Why Log Database Operations?

Logging database operations serves several critical purposes:

- Troubleshooting: Logs help identify and resolve issues quickly.

- Data Security: They provide an audit trail for detecting unauthorized access or suspicious activities.

- Performance Optimization: By analyzing logs, you can identify bottlenecks and improve query performance.

- Compliance: Many industries require detailed audit records for regulatory compliance.

What to Log?

When it comes to database operation logging, consider recording the following:

- Query execution times

- User actions (logins, logouts, failed attempts)

- Schema changes

- Data modifications (inserts, updates, deletes)

- Backup and recovery operations

- Error messages and exceptions

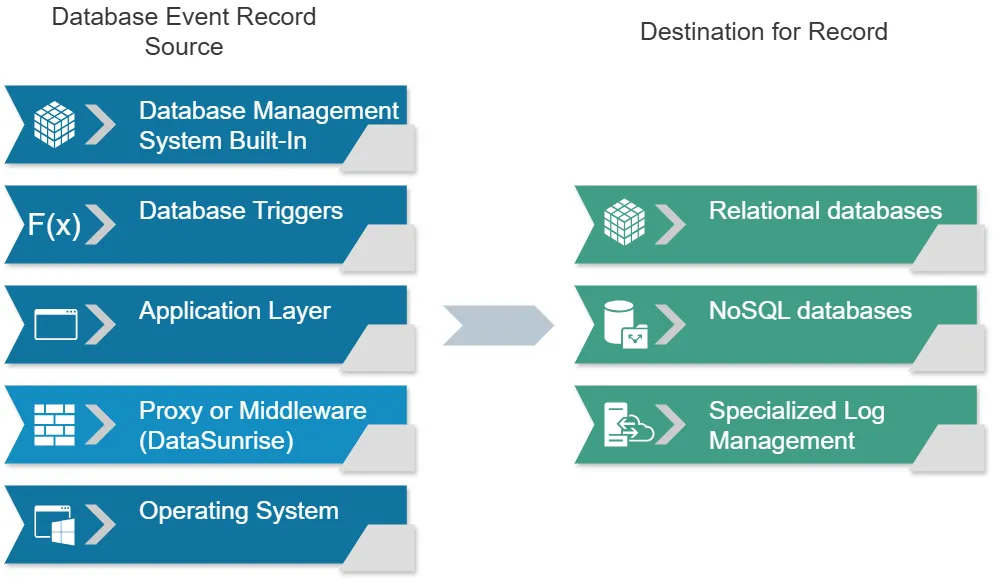

Data Sources for Logging

Before we dive into storage solutions, it’s important to understand where log data comes from. In database systems, log data is typically generated from several sources:

Database Management System (DBMS)

Most database systems have built-in logging mechanisms that capture various events and operations.

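- Example: With logging_collector enabled, PostgreSQL writes server log files to the directory set by log_directory; the log_destination parameter selects where and in what format the output goes (for example stderr, csvlog, or syslog).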

Database Triggers

Custom triggers can be set up to log specific events or data changes.

- Example: A trigger that logs all updates to a sensitive table.
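A trigger of this kind can be sketched with Python's built-in sqlite3 module. This is a minimal illustration, not a production audit design: the salaries and salaries_audit tables, their columns, and the trigger name are all hypothetical, and trigger syntax differs between database systems.

```python
import sqlite3

# In-memory SQLite database; all table and column names are hypothetical.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE salaries (
    employee_id INTEGER PRIMARY KEY,
    amount      REAL NOT NULL
);

CREATE TABLE salaries_audit (
    id          INTEGER PRIMARY KEY AUTOINCREMENT,
    employee_id INTEGER NOT NULL,
    old_amount  REAL,
    new_amount  REAL,
    changed_at  TEXT DEFAULT CURRENT_TIMESTAMP
);

-- Fires once per updated row and copies old/new values to the audit table.
CREATE TRIGGER log_salary_update
AFTER UPDATE ON salaries
FOR EACH ROW
BEGIN
    INSERT INTO salaries_audit (employee_id, old_amount, new_amount)
    VALUES (OLD.employee_id, OLD.amount, NEW.amount);
END;
""")

conn.execute("INSERT INTO salaries (employee_id, amount) VALUES (1, 5000.0)")
conn.execute("UPDATE salaries SET amount = 5500.0 WHERE employee_id = 1")
conn.commit()

audit_rows = conn.execute(
    "SELECT employee_id, old_amount, new_amount FROM salaries_audit"
).fetchall()
print(audit_rows)
```

Because the trigger runs inside the database, the update is recorded no matter which application or user issued it.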

Application Layer

The application interacting with the database can generate logs about database operations it performs.

- Example: A Java application using JDBC to log SQL queries before execution.
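The same idea can be sketched in Python, the language of this article's other application-side example. The helper below is an assumption-laden sketch: execute_logged and the logger name db.queries are hypothetical, SQLite stands in for the real database, and the entry is written after execution so that it can include the duration.

```python
import logging
import sqlite3
import time

logger = logging.getLogger("db.queries")  # hypothetical logger name


def execute_logged(conn, sql, params=()):
    """Run a statement and log its text and duration (parameters are not logged)."""
    start = time.perf_counter()
    try:
        cursor = conn.execute(sql, params)
    except sqlite3.Error:
        logger.exception("Query failed: %s", sql)
        raise
    elapsed_ms = (time.perf_counter() - start) * 1000
    logger.info("Query ok in %.2f ms: %s", elapsed_ms, sql)
    return cursor


logging.basicConfig(level=logging.INFO)
conn = sqlite3.connect(":memory:")
execute_logged(conn, "CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
execute_logged(conn, "INSERT INTO users (name) VALUES (?)", ("alice",))
rows = execute_logged(conn, "SELECT name FROM users WHERE id = ?", (1,)).fetchall()
```

Logging the parameterized statement rather than the bound values keeps user data out of the log, at the cost of less detail when troubleshooting.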

Proxy or Middleware

Database proxies or middleware can intercept and log database traffic.

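- Example: PgBouncer can be configured to log client connections and disconnections (log_connections, log_disconnections). Query text is not part of its standard logging, so query-level logging needs a proxy that parses SQL traffic.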

Operating System

System-level tools can capture database activity at the OS level.

- Example: Linux’s strace command can log system calls made by the database process.

These sources generate the raw log data that needs to be stored and analyzed.

Storage Options for Logging

Now, let’s explore the various storage options for this log data.

1. Relational Databases

Relational databases like PostgreSQL or MySQL can be used for logging. They offer:

- Structured data storage

- Powerful querying capabilities

- ACID compliance

Example:

```sql
CREATE TABLE operation_logs (
    id SERIAL PRIMARY KEY,
    operation_type VARCHAR(50),
    user_id INT,
    query_text TEXT,
    execution_time FLOAT,
    timestamp TIMESTAMP DEFAULT CURRENT_TIMESTAMP
);

INSERT INTO operation_logs (operation_type, user_id, query_text, execution_time)
VALUES ('SELECT', 1, 'SELECT * FROM users WHERE id = 5', 0.023);
```

Result: This creates a table for storing operation logs and inserts a sample log entry.

DataSunrise employs SQLite as its default logging database (also known as the dictionary database) to store all specified events and rules. Users have the option to change this database during the installation process. When deployed in cloud infrastructure, DataSunrise also offers appropriate options tailored for such environments.

2. NoSQL Databases

NoSQL databases like MongoDB or Cassandra are well suited to handling large volumes of semi-structured log data. They offer:

- Scalability

- Flexibility in data schema

- High write throughput

Example (MongoDB):

```javascript
db.operationLogs.insertOne({
    operationType: "UPDATE",
    userId: 2,
    queryText: "UPDATE products SET price = 19.99 WHERE id = 100",
    executionTime: 0.015,
    timestamp: new Date()
});
```

Result: This inserts a log entry into a MongoDB collection.

3. Specialized Log Management Systems

Tools like Elasticsearch, Splunk, or Graylog are designed specifically for log management. They provide:

- Powerful search and analysis capabilities

- Real-time monitoring and alerting

- Visualization tools

Example (Elasticsearch):

```
POST /operation_logs/_doc
{
    "operation_type": "DELETE",
    "user_id": 3,
    "query_text": "DELETE FROM orders WHERE status = 'cancelled'",
    "execution_time": 0.045,
    "@timestamp": "2024-07-03T12:34:56Z"
}
```

Result: This adds a log entry to an Elasticsearch index.

Separating Logging from Main Data Storage

The Case for Separation

Using the same database for both data storage and logging isn’t always the best approach. Here’s why:

- Performance: Logging operations can impact the performance of your main database.

- Security: Keeping logs separate adds an extra layer of security.

- Scalability: Log data can grow rapidly, potentially affecting your main database’s storage capacity.
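One way to picture this separation is an application that holds two connections, one for business data and one for logs. The sketch below uses two in-memory SQLite databases purely so that it runs anywhere; in practice the log store would usually be a different server or service. The update_price function and both schemas are hypothetical.

```python
import sqlite3

# Two independent databases. In-memory SQLite is used only to keep the
# sketch runnable; real deployments would point at separate servers or files.
main_db = sqlite3.connect(":memory:")
log_db = sqlite3.connect(":memory:")

main_db.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, price REAL)")
main_db.execute("INSERT INTO products (id, price) VALUES (100, 24.99)")
log_db.execute(
    "CREATE TABLE operation_logs (operation_type TEXT, query_text TEXT)"
)


def update_price(product_id, price):
    sql = "UPDATE products SET price = ? WHERE id = ?"
    with main_db:  # commits the business change on success
        main_db.execute(sql, (price, product_id))
    try:
        with log_db:  # the log write commits in its own transaction
            log_db.execute(
                "INSERT INTO operation_logs VALUES (?, ?)", ("UPDATE", sql)
            )
    except sqlite3.Error as exc:
        # A failed log write does not undo the already committed data change.
        print(f"Log write failed: {exc}")


update_price(100, 19.99)
price = main_db.execute("SELECT price FROM products WHERE id = 100").fetchone()[0]
log_count = log_db.execute("SELECT COUNT(*) FROM operation_logs").fetchone()[0]
```

The trade-off is visible in the except branch: the data change survives a logging failure, which helps availability but may be unacceptable where every change must have an audit record.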

When to Consider Unified Storage

However, in some cases, using the same database might be beneficial:

- Small-scale applications with low traffic

- When simplicity in setup and maintenance is a priority

- For specific audit requirements where logs need to be tightly coupled with data

Best Practices for Database Logging

- Use structured logging formats (e.g., JSON) for easier parsing and analysis.

- Implement log rotation to manage file sizes and storage.

- Set appropriate log levels to balance verbosity against performance.

- Encrypt sensitive log data to enhance security.

- Regularly review and analyze logs for insights and anomalies.
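The first three practices can be combined using only Python's standard logging module. The following is a minimal sketch: the JsonFormatter class, the db key for extra fields, the file name, and the rotation limits are all illustrative assumptions rather than recommended values.

```python
import json
import logging
import os
import tempfile
from logging.handlers import RotatingFileHandler


class JsonFormatter(logging.Formatter):
    """Render each record as one JSON object per line."""

    def format(self, record):
        entry = {
            "timestamp": self.formatTime(record),
            "level": record.levelname,
            "message": record.getMessage(),
        }
        # Extra fields passed via extra={"db": {...}} (hypothetical key name).
        entry.update(getattr(record, "db", {}))
        return json.dumps(entry)


# Hypothetical log location inside a temporary directory.
log_path = os.path.join(tempfile.mkdtemp(), "db_operations.log")

# Rotate at roughly 1 MB and keep five old files; both limits are arbitrary.
handler = RotatingFileHandler(log_path, maxBytes=1_000_000, backupCount=5)
handler.setFormatter(JsonFormatter())

logger = logging.getLogger("db.operations")
logger.setLevel(logging.INFO)  # DEBUG records are dropped at this level
logger.addHandler(handler)

logger.debug("not written at INFO level")
logger.info(
    "query executed",
    extra={"db": {"operation_type": "SELECT", "user_id": 1, "execution_time": 0.023}},
)
handler.flush()

with open(log_path) as f:
    lines = [json.loads(line) for line in f]
print(lines)
```

One JSON object per line keeps the file easy to parse with standard tools, and the level set on the logger decides which records are written at all.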

Compliance and Auditing

Database logging plays a crucial role in meeting compliance requirements and facilitating audits. Key considerations include:

- Retention policies: Ensure logs are kept for the required duration.

- Access controls: Limit who can view or modify log data.

- Tamper-evident logging: Implement mechanisms to detect log tampering.
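One common way to make logs tamper-evident is a hash chain, in which each entry's hash covers the previous entry's hash. The sketch below illustrates the idea with an in-memory list; the function names and the entry layout are hypothetical, and it is not a complete audit solution.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # arbitrary starting value for the chain


def entry_hash(prev_hash, payload):
    """Hash the previous entry's hash together with this entry's content."""
    body = json.dumps(payload, sort_keys=True)
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append_entry(chain, payload):
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    chain.append({"payload": payload, "hash": entry_hash(prev_hash, payload)})


def verify_chain(chain):
    """Return the index of the first inconsistent entry, or None if intact."""
    prev_hash = GENESIS_HASH
    for index, entry in enumerate(chain):
        if entry["hash"] != entry_hash(prev_hash, entry["payload"]):
            return index
        prev_hash = entry["hash"]
    return None


log_chain = []
append_entry(log_chain, {"user_id": 1, "operation": "SELECT"})
append_entry(log_chain, {"user_id": 2, "operation": "UPDATE"})
append_entry(log_chain, {"user_id": 3, "operation": "DELETE"})

intact_result = verify_chain(log_chain)

# Simulate tampering with the second entry.
log_chain[1]["payload"]["operation"] = "SELECT"
tampered_result = verify_chain(log_chain)
```

A chain like this only detects edits if the most recent hash is also kept somewhere the attacker cannot reach, since anyone with full write access could recompute every hash.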

Performance Considerations

While logging is essential, it’s important to minimize its impact on database performance:

- Asynchronous logging: Write logs asynchronously to reduce latency.

- Batching: Group multiple log entries before writing to storage.

- Sampling: For high-volume systems, consider logging only a sample of events.

Example of asynchronous logging in Python:

```python
import queue
import threading

log_queue = queue.Queue()


def log_writer():
    """Drain the queue until the None sentinel arrives."""
    while True:
        log_entry = log_queue.get()
        if log_entry is None:
            break
        # Write log_entry to storage
        print(f"Writing log: {log_entry}")


writer_thread = threading.Thread(target=log_writer)
writer_thread.start()

# In your main application
log_queue.put("User 123 logged in")
log_queue.put("Query executed: SELECT * FROM users")

# When shutting down
log_queue.put(None)
writer_thread.join()
```

Result: This creates a separate thread for writing logs, allowing the main application to continue without waiting for log writes to complete.
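Batching and sampling, the other two techniques listed above, can be sketched in a similar way. The BatchingLogBuffer class below is hypothetical; write_batch stands for whatever function persists a list of entries, and the batch size and sample rate are illustrative.

```python
import random


class BatchingLogBuffer:
    """Collect entries in memory and hand them to write_batch in groups."""

    def __init__(self, write_batch, batch_size=100, sample_rate=1.0, rng=None):
        self.write_batch = write_batch  # callable that persists a list of entries
        self.batch_size = batch_size
        self.sample_rate = sample_rate  # fraction of routine events to keep
        self.rng = rng or random.Random()
        self.pending = []

    def log(self, entry, always=False):
        # Errors and security events can bypass sampling with always=True.
        if not always and self.rng.random() >= self.sample_rate:
            return
        self.pending.append(entry)
        if len(self.pending) >= self.batch_size:
            self.flush()

    def flush(self):
        if self.pending:
            self.write_batch(self.pending)
            self.pending = []


written_batches = []
buffer = BatchingLogBuffer(written_batches.append, batch_size=3)

for i in range(7):
    buffer.log(f"event {i}")
buffer.flush()  # write the remainder at shutdown

batch_sizes = [len(batch) for batch in written_batches]
```

Entries still sitting in the buffer are lost if the process crashes, so the batch size is a trade-off between write overhead and how much log data is at risk. Sampling is generally unsuitable for audit events that compliance rules require in full.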

Scalability and High Availability

As your system grows, consider these strategies for scaling your logging infrastructure:

- Distributed logging: Use a cluster of log servers to handle high volumes.

- Load balancing: Distribute log writes across multiple nodes.

- Replication: Maintain copies of logs for redundancy and fault tolerance.

Tools and Technologies

Several tools can enhance your database logging strategy:

- Logstash: For collecting, processing, and forwarding logs

- Kibana: For visualizing and analyzing log data

- Fluentd: An open-source data collector for unified logging

Security Considerations

Protect your logs with these security measures:

- Encryption: Both in transit and at rest

- Access controls: Implement role-based access to log data

- Monitoring: Set up alerts for suspicious log access or modifications

Summary and Conclusion

Effective database logging is a cornerstone of robust database management. By choosing the right storage solution, implementing best practices, and leveraging appropriate tools, you can create a logging system that enhances security, aids troubleshooting, and provides valuable insights into your database operations.

Remember, the key to successful database logging lies in striking the right balance between comprehensive data capture and system performance. Regular review and optimization of your logging strategy will ensure it continues to meet your evolving needs.

For user-friendly and flexible tools for database audit, masking, and compliance, consider exploring DataSunrise’s offerings. Visit our website at DataSunrise.com for an online demo and discover how we can enhance your database security and logging capabilities.