Generative AI Audit

In the rapidly evolving landscape of artificial intelligence, generative AI has become a cornerstone of innovation. From OpenAI’s ChatGPT to Amazon Bedrock and other emerging platforms, these technologies are reshaping how we interact with machines. However, this revolution brings significant privacy concerns, especially regarding the handling of Personally Identifiable Information (PII).

This article examines the broader privacy implications of generative AI, outlines the main risks, and describes ways to strengthen auditing and security.

The Expanding World of Generative AI

Generative AI is no longer tied to a single platform. Today, we see a diverse ecosystem:

- OpenAI’s ChatGPT: A conversational AI that has become synonymous with generative capabilities.

- Amazon Bedrock: A fully managed service allowing easy integration of foundation models into applications.

- Google’s Bard (now Gemini): A conversational AI service originally powered by LaMDA.

- Microsoft’s Azure OpenAI Service: Provides access to OpenAI’s models with the added security and enterprise features of Azure.

These platforms offer API access for developers and web-based interfaces for end users. Each additional access point widens the attack surface and raises the risk of data exposure.

Privacy Risks in the Generative AI Landscape

The widespread adoption of generative AI introduces several privacy concerns:

- Data Retention: AI service providers may retain inputs to improve their models, and those inputs can include sensitive information.

- Unintended Information Disclosure: Users might accidentally reveal PII during interactions.

- Model Exploitation: Sophisticated attacks could potentially extract training data from models.

- Cross-Platform Data Aggregation: Data shared across multiple AI services could be combined into comprehensive user profiles.

- API Vulnerabilities: Insecure API implementations might expose user data.

General Strategies for Mitigating Privacy Risks

To address these concerns, organizations should consider the following approaches:

- Data Minimization: Limit the amount of personal data processed by AI systems.

- Anonymization and Pseudonymization: Transform data to remove or obscure identifying information.

- Encryption: Implement strong encryption for data in transit and at rest.

- Access Controls: Strictly manage who can access AI systems and stored data.

- Regular Security Audits: Conduct thorough reviews of AI systems and their data handling practices.

- User Education: Inform users about the risks and best practices when interacting with AI.

- Compliance Frameworks: Align AI usage with regulations like GDPR, CCPA, and industry-specific standards.
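Data minimization and pseudonymization can be applied before a prompt ever leaves the organization. The sketch below is a minimal Python illustration, assuming a keyed-hash (HMAC-SHA256) tokenization scheme and two deliberately simple regular expressions for e-mail addresses and phone numbers. The key, the patterns, and the helpers `pseudonym`, `pseudonymize`, and `restore` are hypothetical and not part of any DataSunrise or AI-platform API; real PII detection needs far broader coverage.

```python
import hashlib
import hmac
import re

# Hypothetical key; in practice it would come from a secrets manager.
SECRET_KEY = b"replace-with-a-managed-secret"

# Simplified detectors for illustration; real PII coverage must be much broader.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def pseudonym(kind: str, value: str) -> str:
    """Derive a stable token: the same value always yields the same token."""
    digest = hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"<{kind}_{digest[:8]}>"


def pseudonymize(text: str) -> tuple[str, dict[str, str]]:
    """Replace detected PII with tokens; return the new text and token->value map."""
    mapping: dict[str, str] = {}
    for kind, pattern in PII_PATTERNS.items():
        def replace(match: re.Match, kind: str = kind) -> str:
            token = pseudonym(kind, match.group(0))
            mapping[token] = match.group(0)
            return token
        text = pattern.sub(replace, text)
    return text, mapping


def restore(text: str, mapping: dict[str, str]) -> str:
    """Put the original values back, e.g. into the AI's response."""
    for token, original in mapping.items():
        text = text.replace(token, original)
    return text


prompt = "Email jane.doe@example.com or call +1 555 123 4567 about the refund."
safe_prompt, mapping = pseudonymize(prompt)
print(safe_prompt)  # the address and phone number are replaced by tokens
```

Only `safe_prompt` would be sent to the AI service; the mapping stays inside the organization. Because each token comes from a keyed hash, the same value always maps to the same token, so the model can still refer to "the same customer" consistently without ever seeing the real address.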

Auditing Generative AI Interactions: Key Aspects

Effective auditing is crucial for maintaining security and compliance. Key aspects include:

- Comprehensive Logging: Record all interactions, including user inputs and AI responses.

- Real-time Monitoring: Implement systems to detect and alert on potential privacy breaches immediately.

- Pattern Analysis: Use machine learning to identify unusual usage patterns that might indicate misuse.

- Periodic Reviews: Regularly examine logs and usage patterns to ensure compliance and identify potential risks.

- Third-party Audits: Engage external experts to provide unbiased assessments of your AI usage and security measures.
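The first two aspects, comprehensive logging and real-time monitoring, can be combined in a single step that runs for every prompt/response pair. The following Python sketch assumes the request body follows the JSON shape of OpenAI’s Chat Completions API (a `model` field plus a `messages` list of `role`/`content` objects). The detectors, the `audit_interaction` helper, and the alerting hook (here just a warning-level log line) are hypothetical placeholders rather than a description of any specific product.

```python
import json
import logging
import re
from datetime import datetime, timezone

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
audit_log = logging.getLogger("ai_audit")

# Deliberately simple detectors, for illustration only.
DETECTORS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "card_number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
}


def find_pii(text: str) -> set[str]:
    """Return the names of the detectors that match anywhere in the text."""
    return {name for name, rx in DETECTORS.items() if rx.search(text)}


def audit_interaction(user_id: str, request_body: str, response_text: str) -> dict:
    """Log one prompt/response pair and raise an alert if PII is detected."""
    request = json.loads(request_body)
    # Assumes the Chat Completions shape: {"model": ..., "messages": [...]}.
    prompt = "\n".join(
        m["content"]
        for m in request.get("messages", [])
        if m.get("role") == "user" and isinstance(m.get("content"), str)
    )
    findings = sorted(find_pii(prompt) | find_pii(response_text))
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user_id,
        "model": request.get("model"),
        "prompt": prompt,
        "response": response_text,
        "pii_findings": findings,
    }
    audit_log.info(json.dumps(record))  # comprehensive logging
    if findings:
        # Real-time monitoring: placeholder for an e-mail, SIEM, or webhook alert.
        audit_log.warning("PII detected for user %s: %s", user_id, ", ".join(findings))
    return record


body = json.dumps({
    "model": "example-model",
    "messages": [
        {"role": "system", "content": "Answer briefly."},
        {"role": "user", "content": "My card is 4111 1111 1111 1111"},
    ],
})
audit_interaction("alice", body, "Please do not share card numbers here.")
```

Note that the stored prompt and response can themselves contain PII, so the audit records usually need their own access controls, retention limits, or masking.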

DataSunrise: A Comprehensive Solution for AI Auditing

DataSunrise offers a robust solution for auditing generative AI interactions across various platforms. Our system integrates seamlessly with different AI services, providing a unified approach to security and compliance.

Key Components of DataSunrise’s AI Audit Solution:

- Proxy Service: Intercepts and analyzes traffic between users and AI platforms.

- Data Discovery: Automatically identifies and classifies sensitive information in AI interactions.

- Real-time Monitoring: Provides immediate alerts on potential privacy violations.

- Audit Logging: Creates detailed, tamper-proof logs of all AI interactions.

- Compliance Reporting: Generates reports tailored to various regulatory requirements.
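In practice, "tamper-proof" logging usually means tamper-evident logging. One common technique, shown here only as a generic illustration and not as a description of how DataSunrise implements it, is hash chaining: each entry stores the SHA-256 hash of the previous entry, so altering a recorded event breaks verification from that point on. The `HashChainedLog` class below is a hypothetical minimal sketch in Python.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


class HashChainedLog:
    """Append-only audit log in which each entry commits to its predecessor."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    @staticmethod
    def _digest(prev_hash: str, event: dict) -> str:
        # Canonical JSON so the same event always hashes the same way.
        payload = json.dumps(event, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()

    def append(self, event: dict) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        entry_hash = self._digest(prev_hash, event)
        self.entries.append({"event": event, "prev": prev_hash, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        """Recompute the chain; edited, reordered, or removed (non-final) entries fail."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            if entry["prev"] != prev_hash:
                return False
            if self._digest(prev_hash, entry["event"]) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = HashChainedLog()
log.append({"user": "alice", "action": "prompt", "pii": ["email"]})
log.append({"user": "bob", "action": "prompt", "pii": []})
print(log.verify())  # True
log.entries[0]["event"]["user"] = "mallory"  # simulate tampering
print(log.verify())  # False
```

A chain alone does not reveal truncation of the newest entries, and an attacker with full write access could recompute every hash. Periodically copying the latest hash to separate, write-once storage narrows both gaps.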

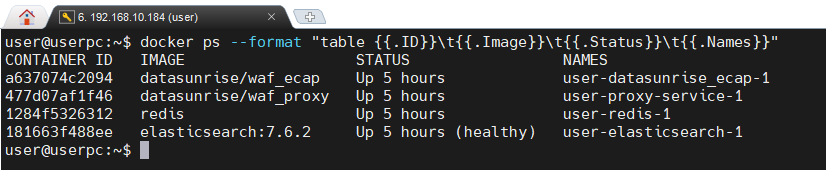

The image above shows four running Docker containers that provide DataSunrise Web Application Firewall functionality for the depicted system.

Example Setup with DataSunrise

A typical DataSunrise deployment for AI auditing might include:

- DataSunrise Proxy: Deployed as a reverse proxy in front of AI services.

- Redis: For caching and session management, improving performance.

- Elasticsearch: For efficient storage and retrieval of audit logs.

- Kibana: For visualizing audit data and creating custom dashboards.

- DataSunrise Management Console: For configuring policies and viewing reports.

This setup can be packaged with container tools such as Docker and orchestrated with Kubernetes, which helps with scalability and ease of management.

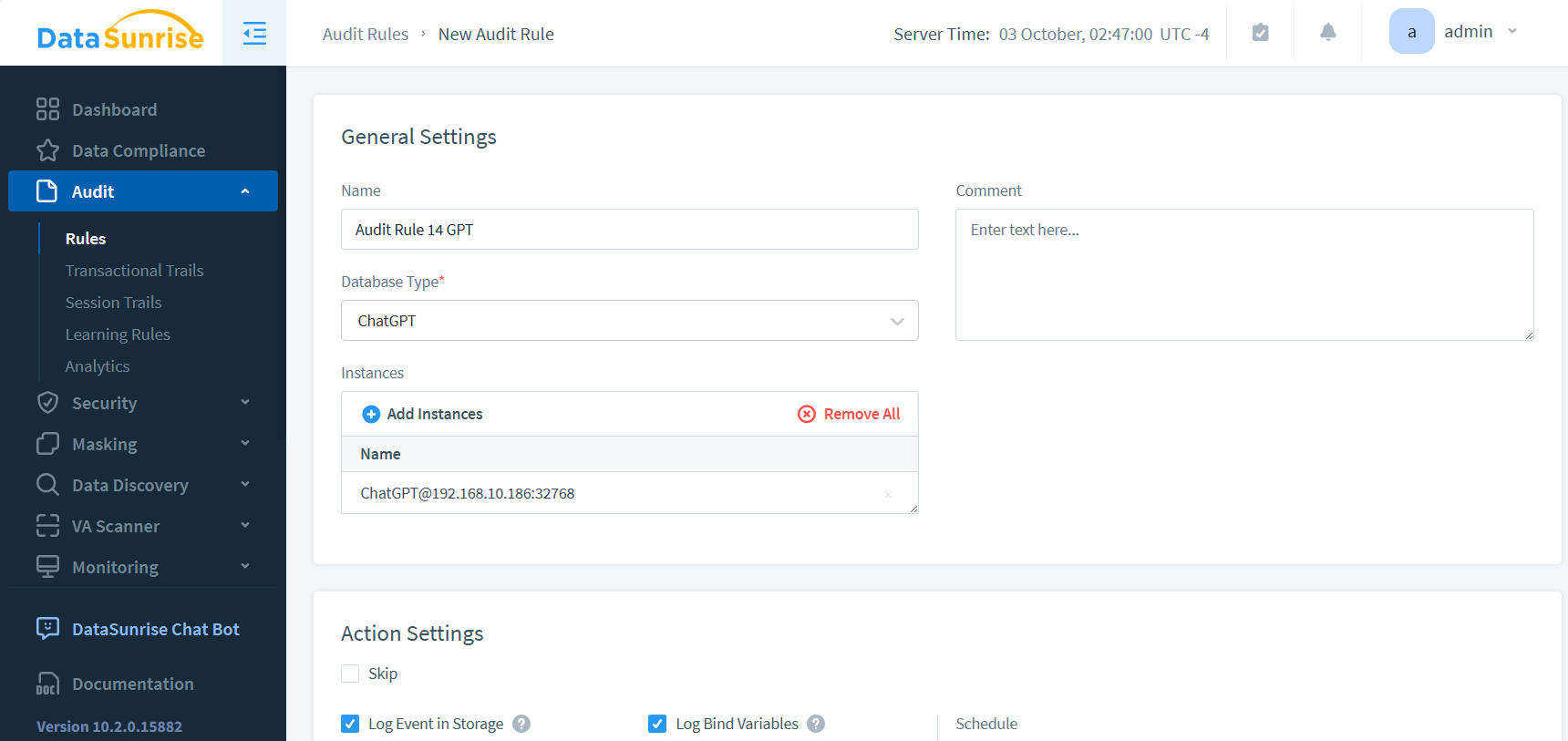

Setting up audit rules is straightforward. Here, we select the relevant instance, which is not a database but ChatGPT, a web application. This shows that the auditing system can handle different types of applications.

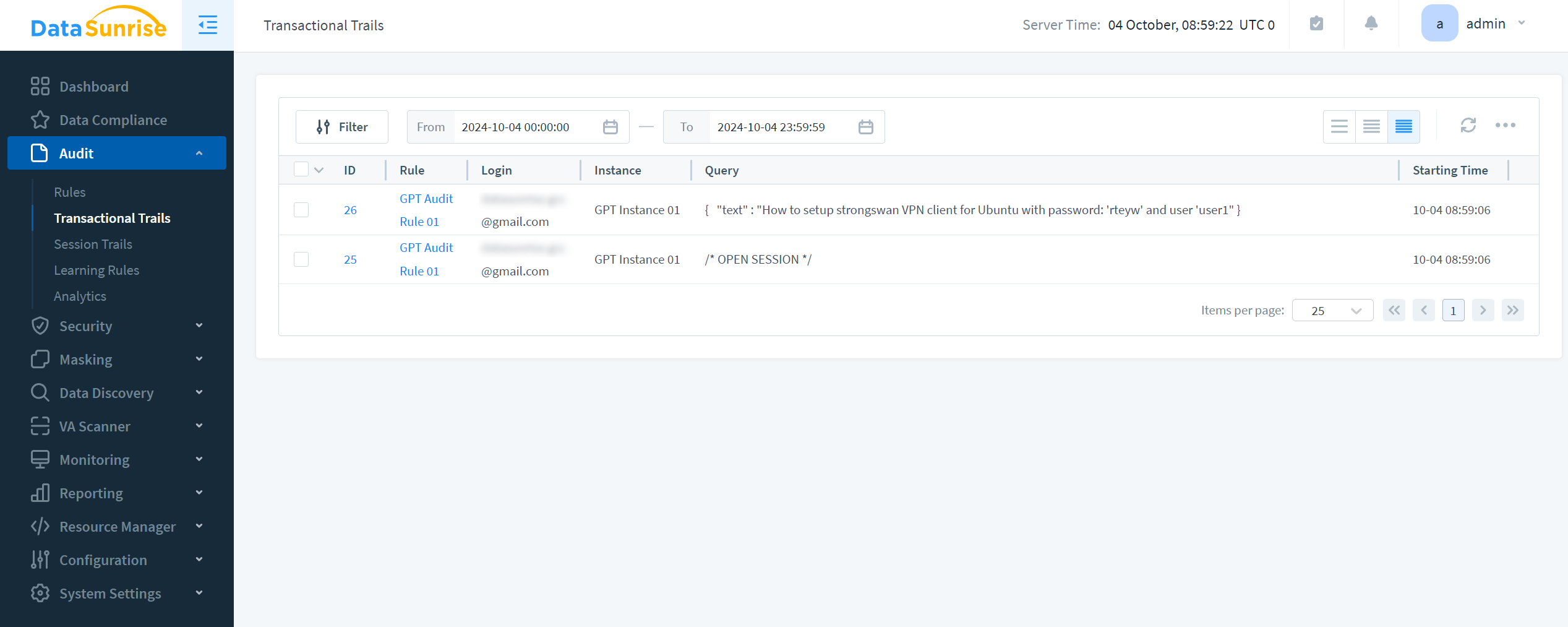

The image above shows the audit results together with the corresponding GPT prompt.

Conclusion: Embracing AI with Confidence

Generative AI is becoming part of our daily lives and business operations. As it evolves, strong auditing and security measures are essential to using it safely.

With clear strategies and advanced tools from DataSunrise, organizations can use AI effectively while keeping data safe and protecting privacy.

The future of AI is bright, but it must be built on a foundation of trust and security. With proper auditing and privacy measures in place, we can unlock the full potential of generative AI while protecting the rights and information of individuals and organizations.

DataSunrise: Your Partner in AI Security

DataSunrise is a leader in AI security. Beyond audit tools, it provides a full set of features that protect your data across different platforms and databases.

Our solution adapts to the specific challenges of generative AI, helping your organization stay ahead of potential threats.

We invite you to explore how DataSunrise can enhance your AI security posture. Visit the DataSunrise website to schedule a demo. Learn how our advanced solutions can help you manage AI governance and data protection.