How to Audit Apache Hive

Introduction

Many organizations use Apache Hive to process and analyze large volumes of structured data stored in Hadoop. As more sensitive data flows through Hive, effective audit mechanisms become essential for both security and regulatory compliance.

This guide walks through setting up audit capabilities for Apache Hive, from native auditing features to enhanced solutions with DataSunrise, so that you can monitor data access, detect unauthorized activity, and maintain compliance.

How to Audit Apache Hive Using Native Capabilities

Apache Hive offers several built-in mechanisms that you can configure to track user activities and operations performed on data. Let's explore how to set up these native auditing capabilities:

Step 1: Enable SQL Standards Based Authorization

The SQL Standards Based Authorization in Hive provides a role-based access control model that includes basic auditing capabilities. This model records operations and privilege changes performed by users.

To enable SQL Standards Based Authorization, modify your hive-site.xml configuration file:

<property>
  <name>hive.security.authorization.enabled</name>
  <value>true</value>
</property>
<property>
  <name>hive.security.authorization.manager</name>
  <value>org.apache.hadoop.hive.ql.security.authorization.plugin.sqlstd.SQLStdHiveAuthorizerFactory</value>
</property>
<property>
  <name>hive.security.authenticator.manager</name>
  <value>org.apache.hadoop.hive.ql.security.SessionStateUserAuthenticator</value>
</property>
<property>
  <name>hive.server2.enable.doAs</name>
  <value>false</value>
</property>

After making these changes, restart the Hive services to apply the configuration.
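Before restarting, it can help to confirm that the file really contains the intended values. The following is a minimal sketch rather than an official Hive tool: the names `EXPECTED`, `load_properties`, and `check_hive_site` are illustrative, and the code assumes a standard Hadoop-style file whose `<property>` elements carry `<name>` and `<value>` children.

```python
import xml.etree.ElementTree as ET

# Expected values, copied from the configuration shown above.
EXPECTED = {
    "hive.security.authorization.enabled": "true",
    "hive.security.authorization.manager":
        "org.apache.hadoop.hive.ql.security.authorization.plugin.sqlstd."
        "SQLStdHiveAuthorizerFactory",
    "hive.security.authenticator.manager":
        "org.apache.hadoop.hive.ql.security.SessionStateUserAuthenticator",
    "hive.server2.enable.doAs": "false",
}


def load_properties(xml_text):
    """Return {name: value} for every <property> in a Hadoop-style config."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.iter("property"):
        name = (prop.findtext("name") or "").strip()
        value = (prop.findtext("value") or "").strip()
        if name:
            props[name] = value
    return props


def check_hive_site(xml_text, expected=EXPECTED):
    """Return (name, expected, actual) for each missing or mismatched setting."""
    actual = load_properties(xml_text)
    return [
        (name, want, actual.get(name))
        for name, want in expected.items()
        if actual.get(name) != want
    ]
```

Calling `check_hive_site(open(path).read())` on your own hive-site.xml returns an empty list when all four settings match; any tuple in the result names a property to revisit.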

Step 2: Configure Logging Framework

Apache Hive uses Log4j 2 for logging, and you can configure it to write audit-related events to a dedicated file. To do so, add the following to the hive-log4j2.properties file:

# Hive Audit Logging
appender.AUDIT.type = RollingFile
appender.AUDIT.name = AUDIT
appender.AUDIT.fileName = ${sys:hive.log.dir}/${sys:hive.log.file}.audit
appender.AUDIT.filePattern = ${sys:hive.log.dir}/${sys:hive.log.file}.audit.%d{yyyy-MM-dd}
appender.AUDIT.layout.type = PatternLayout
appender.AUDIT.layout.pattern = %d{ISO8601} %p %c: %m%n
appender.AUDIT.policies.type = Policies
appender.AUDIT.policies.time.type = TimeBasedTriggeringPolicy
appender.AUDIT.policies.time.interval = 1
appender.AUDIT.policies.time.modulate = true
appender.AUDIT.strategy.type = DefaultRolloverStrategy
appender.AUDIT.strategy.max = 30

# Audit Logger
logger.audit.name = org.apache.hadoop.hive.ql.audit
logger.audit.level = INFO
logger.audit.additivity = false
logger.audit.appenderRef.audit.ref = AUDIT

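These settings route events from the org.apache.hadoop.hive.ql.audit logger to a dedicated audit file that rolls over daily, with one timestamped line per event. If your hive-log4j2.properties declares explicit appenders and loggers lists, add AUDIT and audit to them; otherwise Log4j ignores the new definitions. Which events appear depends on the loggers your Hive version actually emits. In many releases, for example, the metastore writes its audit entries through the org.apache.hadoop.hive.metastore.HiveMetaStore.audit logger, so you may need an additional logger entry for it.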
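Once the file is being written, a small parser makes it easier to inspect. The sketch below splits lines produced by the pattern `%d{ISO8601} %p %c: %m%n` into fields. The function names are illustrative, and the regular expression accepts either `T` or a space between date and time because the exact ISO8601 rendering is an assumption that may differ across Log4j versions.

```python
import re
from collections import Counter

# One audit line: timestamp, level, logger name, then ": " and the message.
LINE_RE = re.compile(
    r"^(?P<timestamp>\d{4}-\d{2}-\d{2}[T ]\d{2}:\d{2}:\d{2}[,.]\d{3})\s+"
    r"(?P<level>[A-Z]+)\s+"
    r"(?P<logger>\S+): "
    r"(?P<message>.*)$"
)


def parse_line(line):
    """Return a dict of fields, or None for lines that do not match
    (for example stack-trace continuation lines)."""
    match = LINE_RE.match(line.rstrip("\n"))
    return match.groupdict() if match else None


def count_by_logger(lines):
    """Count parsed events per logger name, skipping unparseable lines."""
    counts = Counter()
    for line in lines:
        record = parse_line(line)
        if record:
            counts[record["logger"]] += 1
    return counts
```

Feeding the open audit file to `count_by_logger` gives a quick view of which loggers are actually contributing events, which is useful when the file turns out to be emptier than expected.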

Step 3: Enable HDFS Audit Logs

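Because Hive operations ultimately translate into HDFS operations, HDFS audit logs add another layer of visibility. The NameNode writes these entries through its FSNamesystem.audit logger, which must be enabled in the NameNode's Log4j configuration. The related properties below go in hdfs-site.xml, except hadoop.security.authorization, which is normally set in core-site.xml: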

<property>
  <name>hadoop.security.authorization</name>
  <value>true</value>
</property>
<property>
  <name>dfs.namenode.audit.log.async</name>
  <value>true</value>
</property>
<property>
  <name>dfs.namenode.audit.log.debug.cmdlist</name>
  <value>open,create,delete,append,rename</value>
</property>

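Note that dfs.namenode.audit.log.debug.cmdlist names the commands that are logged only when the audit logger runs at DEBUG level. With the logger at INFO, the commands listed above are therefore omitted from the audit log; leave the list empty if you want them recorded at INFO. Restart the HDFS services to apply these changes.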
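To relate NameNode audit entries back to Hive activity, you can filter them by warehouse path. The sketch below assumes the common tab-separated `key=value` layout of HDFS audit lines (`allowed`, `ugi`, `ip`, `cmd`, `src`, `dst`, `perm`) and uses `/user/hive/warehouse` as the warehouse location; both are assumptions to check against your cluster, and the function names are illustrative.

```python
def parse_hdfs_audit(line):
    """Parse one HDFS audit line into a dict of its key=value fields.

    Returns None when the line carries no audit fields.
    """
    start = line.find("allowed=")
    if start == -1:
        return None
    fields = {}
    for part in line[start:].rstrip("\n").split("\t"):
        key, sep, value = part.partition("=")
        if sep:
            fields[key.strip()] = value.strip()
    return fields


def hive_warehouse_events(lines, warehouse_prefix="/user/hive/warehouse"):
    """Yield parsed audit records whose source path is under the warehouse."""
    for line in lines:
        record = parse_hdfs_audit(line)
        if record and record.get("src", "").startswith(warehouse_prefix):
            yield record
```

Each yielded record exposes fields such as `record["ugi"]` and `record["cmd"]`, which can then be matched against the Hive-side log by time.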

Step 4: Test Audit Logging

To verify that auditing is working correctly, perform various Hive operations and check the audit logs:

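-- Create a test database
CREATE DATABASE audit_test;

-- Create a table (UPDATE below requires a transactional ORC table)
USE audit_test;
CREATE TABLE employee (
  id INT,
  name STRING,
  salary FLOAT
)
STORED AS ORC
TBLPROPERTIES ('transactional' = 'true');

-- Insert data
INSERT INTO employee VALUES (1, 'John Doe', 75000.00);
INSERT INTO employee VALUES (2, 'Jane Smith', 85000.00);

-- Query data
SELECT * FROM employee WHERE salary > 80000;

-- Update data (needs ACID support: hive.support.concurrency=true and
-- hive.txn.manager=org.apache.hadoop.hive.ql.lockmgr.DbTxnManager)
UPDATE employee SET salary = 90000.00 WHERE id = 1;

-- Drop table
DROP TABLE employee;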

After executing these operations, check the audit logs to ensure they're recording all activities:

cat ${HIVE_LOG_DIR}/hive.log.audit
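Reading the file by eye works for a handful of statements; for a repeatable check, a short script can report which test operations left no trace. This is a sketch under a clear assumption: it presumes the audit messages contain the statement text or operation keywords verbatim, which depends on what your configured loggers record. The names `EXPECTED_OPERATIONS` and `missing_operations` are illustrative.

```python
# Keywords for the test statements above. Whether they appear verbatim in
# the audit log is an assumption that depends on the configured loggers.
EXPECTED_OPERATIONS = [
    "CREATE DATABASE",
    "CREATE TABLE",
    "INSERT INTO",
    "SELECT",
    "UPDATE",
    "DROP TABLE",
]


def missing_operations(log_text, expected=EXPECTED_OPERATIONS):
    """Return the expected keywords that never occur in the log text.

    The comparison is case-insensitive.
    """
    haystack = log_text.upper()
    return [op for op in expected if op not in haystack]
```

An empty result suggests every test statement was captured; a non-empty one points at the operation types whose logging still needs attention.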

Step 5: Integrate with Apache Ranger (Optional)

For more comprehensive auditing capabilities, integrate Apache Hive with Apache Ranger. Ranger provides centralized security administration and detailed audit logs for Hadoop components.

- Install Apache Ranger using the official installation guide.

- Configure the Ranger Hive plugin by modifying hive-site.xml:

<property>
  <name>hive.security.authorization.enabled</name>
  <value>true</value>
</property>
<property>
  <name>hive.security.authorization.manager</name>
  <value>org.apache.ranger.authorization.hive.authorizer.RangerHiveAuthorizerFactory</value>
</property>

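- Configure the Ranger audit settings in ranger-hive-audit.xml. The example below sends audit events to a relational database; newer Ranger releases favor Solr or HDFS destinations (xasecure.audit.destination.solr, xasecure.audit.destination.hdfs), so confirm which destinations your version supports: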

<property>
  <name>xasecure.audit.is.enabled</name>
  <value>true</value>
</property>
<property>
  <name>xasecure.audit.destination.db</name>
  <value>true</value>
</property>
<property>
  <name>xasecure.audit.destination.db.jdbc.driver</name>
  <value>org.postgresql.Driver</value>
</property>
<property>
  <name>xasecure.audit.destination.db.jdbc.url</name>
  <value>jdbc:postgresql://ranger-db:5432/ranger</value>
</property>
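If the Ranger audit events are also available as JSON lines (for instance from a file or HDFS destination), a few lines of code can surface denied requests. The sketch below is illustrative only: the field names `reqUser` and `result`, with `result` equal to 0 meaning denied, reflect how Ranger audit records commonly look, but they are assumptions here and should be verified against your own output.

```python
import json
from collections import Counter


def denied_by_user(lines):
    """Count denied access events per requesting user.

    Lines that are blank or not valid JSON objects are skipped.
    """
    counts = Counter()
    for line in lines:
        line = line.strip()
        if not line:
            continue
        try:
            event = json.loads(line)
        except json.JSONDecodeError:
            continue
        if not isinstance(event, dict):
            continue
        # Assumed convention: result == 0 marks a denied request.
        if event.get("result") == 0:
            counts[event.get("reqUser", "unknown")] += 1
    return counts
```

A user with an unusually high denial count is often the first thing worth reviewing in an access audit.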

Limitations of Native Auditing

While these native auditing mechanisms provide basic functionality, they have several limitations:

- Fragmented Audit Data: Audit information is spread across multiple log files and systems.

- Complex Configuration: Setting up comprehensive auditing requires configuring multiple components.

- Limited Monitoring Tools: Native audit logs lack user-friendly interfaces for analysis.

- Manual Compliance Reporting: Generating compliance reports requires custom scripts or manual extraction.

- Resource Intensive: Extensive auditing can impact performance in high-volume environments.
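To make the fragmentation and manual-reporting points concrete, here is a minimal sketch of the kind of custom script native auditing tends to require: it merges already-parsed events from several sources into one time-ordered trail. The `(timestamp, source, message)` tuple layout, the sample events, and the function name are illustrative assumptions, not a format defined by Hive, HDFS, or Ranger.

```python
import heapq
from datetime import datetime


def merge_audit_streams(*streams):
    """Merge per-source event streams into one list ordered by timestamp.

    Each stream is an iterable of (timestamp, source, message) tuples that
    is already sorted by timestamp, as log files normally are.
    """
    return list(heapq.merge(*streams, key=lambda event: event[0]))


# Hypothetical sample events standing in for parsed log lines.
hive_events = [
    (datetime(2024, 5, 1, 10, 0, 0), "hive", "query submitted"),
    (datetime(2024, 5, 1, 10, 0, 5), "hive", "query finished"),
]
hdfs_events = [
    (datetime(2024, 5, 1, 10, 0, 2), "hdfs", "cmd=open"),
]

for timestamp, source, message in merge_audit_streams(hive_events, hdfs_events):
    print(timestamp.isoformat(), source, message)
```

Even this small example needs per-source parsers and consistent clocks before it is useful, which is the maintenance burden the list above describes.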

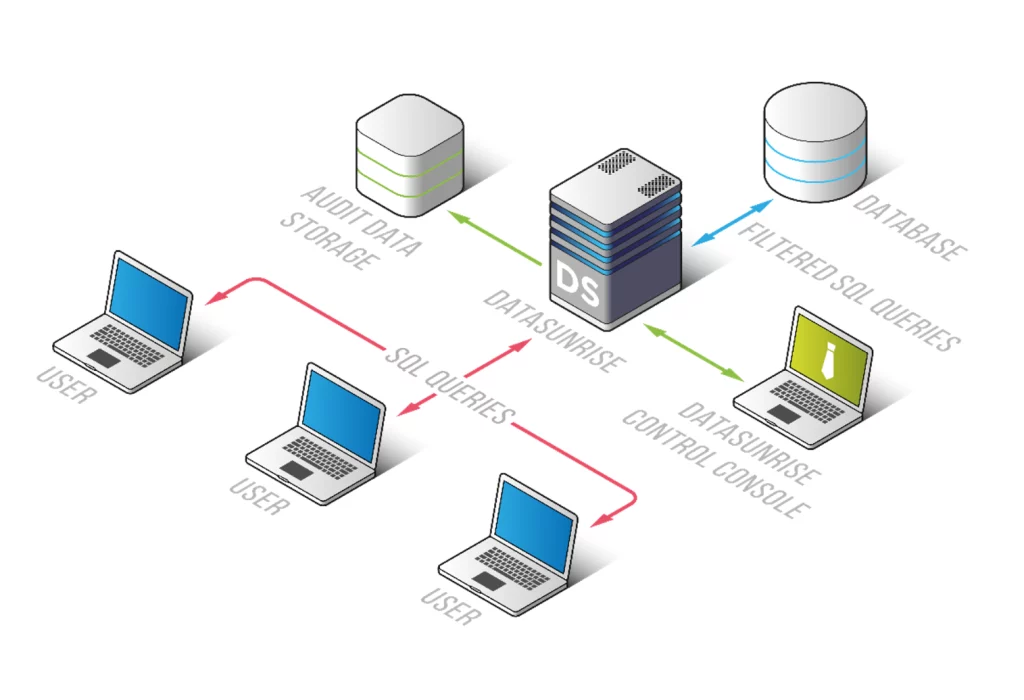

How to Audit Apache Hive Efficiently with DataSunrise

For organizations that require more comprehensive auditing solutions, DataSunrise provides advanced capabilities that address the limitations of native Hive auditing. Let's explore how to set up and configure DataSunrise for auditing Apache Hive:

Step 1: Deploy DataSunrise

Begin by deploying DataSunrise in your environment. DataSunrise offers flexible deployment options including on-premises, cloud, and hybrid configurations.

Step 2: Connect to Apache Hive

Once DataSunrise is deployed, connect it to your Apache Hive environment:

- Navigate to the DataSunrise management console.

- Go to "Databases" and select "Add Database."

- Select "Apache Hive" as the database type.

- Enter the connection details for your Hive instance, including host, port, and authentication credentials.

- Test the connection to ensure it's properly configured.
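If the connection test in the console fails, it is worth ruling out basic network reachability first. The sketch below is a generic TCP check and not part of DataSunrise: the function name is illustrative, and the default port 10000 is the usual HiveServer2 port, which your deployment may override.

```python
import socket


def can_reach(host, port=10000, timeout=3.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution errors.
        return False
```

Run it from the machine that hosts DataSunrise, for example `can_reach("hive.example.internal")` with your own host name; a `False` result points to a firewall, routing, or host name problem rather than to credentials.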

Step 3: Configure Audit Rules

Create audit rules to define what activities should be monitored:

- Go to "Rules" and select "Add Rule."

- Choose "Audit" as the rule type.

- Configure the rule parameters, including:

  - Rule name and description

  - Target objects (databases, tables, views)

  - Users and roles to monitor

  - Types of operations to audit (SELECT, INSERT, UPDATE, DELETE, etc.)

  - Time-based conditions (if needed)

- Save and activate the rule.

Step 4: Test and Validate Auditing

Perform various Hive operations to validate that DataSunrise is properly auditing activities:

- Execute the same test queries used earlier to validate native auditing.

- Navigate to the "Audit Log" section in DataSunrise to view the captured audit events.

- Verify that all operations are being properly recorded with detailed information including:

  - User identity

  - Timestamp

  - SQL query

  - Operation type

  - Affected objects

  - Source IP address

Conclusion

Effective auditing of Apache Hive is essential for maintaining security, ensuring compliance, and gaining visibility into data access patterns. While Hive's native auditing capabilities provide basic functionality, organizations with advanced requirements benefit from comprehensive solutions like DataSunrise.

DataSunrise enhances Apache Hive auditing with centralized management, detailed audit trails, real-time alerting, and automated compliance reporting. By implementing a robust auditing solution, organizations can protect their sensitive data, maintain regulatory compliance, and quickly respond to security incidents.

Ready to enhance your Apache Hive auditing capabilities? Schedule a demo to see how DataSunrise can help you implement comprehensive auditing for your Hive environment.