Production Testing

Introduction

In software development, it’s essential to make sure that applications are reliable and work well before they reach users. This is where production testing comes into play: it evaluates how software behaves in its real, live environment. It is also known as testing in production or production verification testing.

This article covers the basics of production testing: how production data is used for testing, the risks involved, and how production data differs from test data. It also looks at feature flags and at best practices for testing in production.

What is Production Testing?

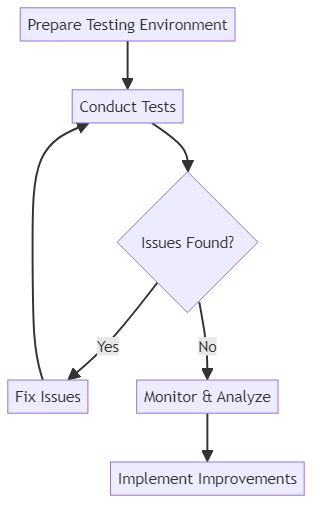

Production testing is the practice of evaluating a software application’s functionality, performance, and user experience in a production environment, or one that closely mirrors it. It involves running tests and monitoring the application’s behavior under real-world conditions, with actual user traffic and data. The main goal is to find and fix problems that do not surface during controlled testing.

Production testing encompasses various activities, such as:

- Functional testing: Verifying that the application’s features and functionalities work as expected in the production environment.

- Performance testing: Assessing the application’s responsiveness, scalability, and resource utilization under real-world load.

- User experience testing: Evaluating the application’s usability, accessibility, and overall user satisfaction.

- Security testing: Identifying and mitigating potential security vulnerabilities and ensuring data privacy.

By testing in production, teams can see how people actually use the product and fix issues before they degrade the user experience.
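A scheduled synthetic check is one common way to cover functional and performance testing in production at the same time. The sketch below is illustrative rather than a prescribed method: `probe`, `fetch_status`, and the "HTTP 200 within a latency budget" rule are assumptions, and the fetch function is injectable so the check can be exercised without a live service.

```python
import time
import urllib.error
import urllib.request
from dataclasses import dataclass
from typing import Callable


@dataclass
class ProbeResult:
    ok: bool
    status: int
    latency_ms: float


def fetch_status(url: str, timeout: float = 5.0) -> int:
    """Send a GET request and return the HTTP status code."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # urlopen raises HTTPError for 4xx/5xx responses


def probe(
    url: str,
    max_latency_ms: float,
    fetch: Callable[[str], int] = fetch_status,
) -> ProbeResult:
    """Run one synthetic check: pass only on HTTP 200 within the latency budget."""
    start = time.perf_counter()
    try:
        status = fetch(url)
    except OSError:
        status = 0  # connection failures and timeouts count as failed checks
    latency_ms = (time.perf_counter() - start) * 1000
    ok = status == 200 and latency_ms <= max_latency_ms
    return ProbeResult(ok=ok, status=status, latency_ms=latency_ms)
```

For example, `probe("https://example.com/health", max_latency_ms=500)` would check a hypothetical health endpoint; a scheduler such as cron could run it every few minutes and raise an alert whenever `ok` is false.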

Using Production Data for Testing

One approach is to use actual production data for testing. This involves replicating or mirroring the production environment, including the database and its contents, in a separate testing environment. With real production data, testers can find problems that synthetic test data might not reveal.

However, using production data for testing comes with its own set of challenges and considerations:

- Data sensitivity: Production data often contains sensitive information, such as personally identifiable information (PII) or financial data. Stringent security measures must be in place to protect this data during testing.

- Data volume: Production databases are often very large, so replicating them in a testing environment can be difficult and time-consuming. Maintaining a testing environment with an equivalent amount of data adds further complexity and cost.

- Data consistency: Ensuring data consistency between the production and testing environments is crucial to obtain accurate test results. Any discrepancies can lead to false positives or false negatives.
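One lightweight way to catch drift between production and a test copy is to compare the row count and an order-independent checksum of each table. This is a minimal sketch assuming rows arrive as tuples of plain Python values (for example, from a DB-API cursor); the function names are hypothetical.

```python
from __future__ import annotations

import hashlib
from typing import Iterable, Sequence

_MODULUS = 1 << 256


def table_fingerprint(rows: Iterable[Sequence[object]]) -> tuple[int, str]:
    """Return (row_count, digest) for a table; row order does not matter."""
    count = 0
    total = 0
    for row in rows:
        # Delimit values so rows like (1, 23) and (12, 3) encode differently
        encoded = "\x1f".join(repr(value) for value in row).encode("utf-8")
        row_hash = int.from_bytes(hashlib.sha256(encoded).digest(), "big")
        total = (total + row_hash) % _MODULUS  # addition is order-independent
        count += 1
    return count, f"{total:064x}"


def environments_match(prod_rows, test_rows) -> bool:
    """True when both sides hold the same multiset of rows (barring hash collisions)."""
    return table_fingerprint(prod_rows) == table_fingerprint(test_rows)
```

Summing per-row hashes, rather than XOR-ing them, keeps duplicate rows from cancelling each other out, and the result does not depend on the order in which a query returns rows.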

To mitigate these challenges, organizations often use data masking techniques to obfuscate sensitive information while preserving the data’s structure and distribution. Data subsetting or sampling can also produce smaller, representative datasets for testing.
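A minimal sketch of masking and subsetting follows, assuming records are dictionaries with hypothetical `name` and `email` fields. It uses keyed hashing (HMAC-SHA256) as the masking technique, so identical inputs map to identical tokens and joins across tables still line up; the key shown is a placeholder that would normally come from a secrets manager.

```python
import hashlib
import hmac
import random

# Placeholder key: load the real one from a secrets manager, never from source code
MASKING_KEY = b"replace-with-a-real-secret"


def pseudonymize(value: str) -> str:
    """Replace a value with a keyed-hash token; equal inputs give equal tokens."""
    digest = hmac.new(MASKING_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"tok_{digest[:16]}"


def mask_email(email: str) -> str:
    """Hide the local part of an address but keep the domain."""
    local, _, domain = email.partition("@")
    return f"{pseudonymize(local)}@{domain}"


def mask_record(record: dict) -> dict:
    """Return a masked copy; non-sensitive fields such as IDs are kept as-is."""
    masked = dict(record)
    masked["name"] = pseudonymize(record["name"])
    masked["email"] = mask_email(record["email"])
    return masked


def sample_subset(records: list, fraction: float, seed: int = 42) -> list:
    """Draw a reproducible random subset containing about `fraction` of the records."""
    if not records:
        return []
    k = min(len(records), max(1, round(len(records) * fraction)))
    return random.Random(seed).sample(records, k)
```

Keeping the email domain preserves the distribution of providers, at the cost of revealing it; a stricter policy could pseudonymize the whole address.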

Risks of Using Production Data for Testing

Using real data for testing has benefits such as realism and coverage. However, it also carries risks that require careful control.

- Data breach: Using production data for testing without proper security measures makes unauthorized access and data breaches more likely. Access to sensitive information should be restricted to the testers who genuinely need it.

- Compliance violations: Many industries have strict regulations governing the handling of sensitive data, such as GDPR or HIPAA. Using production data for testing without proper safeguards can lead to compliance violations and legal consequences.

- Performance impact: Testing on a live system with real data can affect its performance and availability. Ensuring that testing activities do not disrupt the production services is essential.
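One common safeguard against performance impact is to cap how fast synthetic test traffic can reach production. The token bucket below is a standard rate-limiting technique offered as an illustration, not something this article prescribes; the class name is hypothetical and the rate and capacity values are placeholders to tune.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow about `rate` test requests per second, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: int,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)  # start full so a small burst is allowed
        self.clock = clock
        self.last = clock()

    def try_acquire(self) -> bool:
        """Consume one token if available; return False when over the limit."""
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

A test harness would call `try_acquire()` before each synthetic request and skip or delay the request when it returns `False`. The injectable `clock` makes the limiter easy to test deterministically.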

To mitigate these risks, organizations should establish clear policies and procedures for production testing, including access controls, data encryption, and monitoring mechanisms. Organizations should conduct regular audits and security assessments to identify and address potential vulnerabilities.
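Access controls can be as simple as field-level redaction driven by each role's permissions. In this sketch the role names, the list of sensitive fields, and the `<redacted>` placeholder are all hypothetical; unknown roles are denied access to sensitive fields by default.

```python
SENSITIVE_FIELDS = {"email", "card_number"}

# Hypothetical policy: which sensitive fields each role may read
ROLE_PERMISSIONS = {
    "qa_engineer": set(),
    "data_steward": {"email", "card_number"},
}


def view_for_role(record: dict, role: str) -> dict:
    """Return a copy of the record with fields the role may not read redacted."""
    allowed = ROLE_PERMISSIONS.get(role, set())  # unknown roles see nothing sensitive
    return {
        key: value if key not in SENSITIVE_FIELDS or key in allowed else "<redacted>"
        for key, value in record.items()
    }
```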

Production Data vs. Test Data

While production data offers realism, it is not always feasible or desirable to use it for every testing scenario. Test data, by contrast, is designed and generated specifically for testing. It aims to cover a wide range of test cases and boundary conditions without the complexity and risks associated with production data.

Test data has several advantages over production data:

- Control and flexibility: Test data can be tailored to specific testing needs, so testers can explore different scenarios and edge cases. They have full control over the data’s characteristics and can easily modify it as needed.

- Isolation: Test data is kept separate from live systems, so tests can run without affecting real users or data.

- Efficiency: Test data is usually smaller than production data, so it’s quicker to prepare and run tests with it. This enables more frequent and iterative testing cycles.
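Generating test data programmatically makes the control and efficiency benefits concrete. The sketch below assumes a hypothetical `Order` record whose `quantity` must be between 1 and 100. It applies boundary-value analysis (values just outside, on, and just inside each limit) and uses a seeded random generator so every run produces the same data.

```python
import random
from dataclasses import dataclass


@dataclass
class Order:
    quantity: int
    customer_name: str


def boundary_values(low: int, high: int) -> list[int]:
    """Values just outside, on, and just inside each limit of a valid range."""
    return [low - 1, low, low + 1, high - 1, high, high + 1]


def generate_orders(n: int, seed: int = 0) -> list[Order]:
    """Boundary-case orders first, then n random valid orders; same seed, same data."""
    rng = random.Random(seed)
    # Edge-case names: empty, single character, punctuation, non-ASCII, very long
    edge_names = ["", "a", "O'Brien", "Zoë", "x" * 255]
    orders = [Order(q, rng.choice(edge_names)) for q in boundary_values(1, 100)]
    orders += [Order(rng.randint(1, 100), f"customer-{i}") for i in range(n)]
    return orders
```

The quantities 0 and 101 are deliberately invalid, so the same dataset also exercises input validation.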

However, test data has its limitations too. It may not capture the full variety and irregularities of real data, so some defects can go unnoticed. Striking the right balance between test data and production data is crucial for comprehensive test coverage.

Feature Flags in Production Testing

Feature flags are a powerful technique in production testing for controlling the rollout of new features or changes in a live environment. They let developers enable or disable specific functionality without deploying new code, which is particularly useful for:

- Gradual rollouts: Introducing new features to a subset of users to gather feedback and monitor performance.

- A/B testing: Comparing different versions of a feature to determine which performs better.

- Quick disabling: Turning off problematic features without a full rollback.

The following Python code demonstrates a simple implementation of feature flags:

```python
import random


class FeatureFlags:
    """In-memory store of on/off switches for named features."""

    def __init__(self):
        self.flags = {
            "new_ui": False,
            "improved_algorithm": False,
            "beta_feature": False,
        }

    def is_enabled(self, feature):
        # Unknown features are treated as disabled
        return self.flags.get(feature, False)

    def enable(self, feature):
        if feature in self.flags:
            self.flags[feature] = True

    def disable(self, feature):
        if feature in self.flags:
            self.flags[feature] = False


def main():
    feature_flags = FeatureFlags()

    # Simulate gradual rollout: each run stands in for one user,
    # and about 20% of runs get the new UI
    if random.random() < 0.2:
        feature_flags.enable("new_ui")

    # Use feature flags in the application
    if feature_flags.is_enabled("new_ui"):
        print("Displaying new UI")
    else:
        print("Displaying old UI")

    if feature_flags.is_enabled("improved_algorithm"):
        print("Using improved algorithm")
    else:
        print("Using standard algorithm")

    if feature_flags.is_enabled("beta_feature"):
        print("Beta feature is active")


if __name__ == "__main__":
    main()
```

In this example:

- The `FeatureFlags` class manages the state of different features.

- The `main()` function demonstrates how to use these flags to control application behavior.

- We simulate a gradual rollout by enabling the "new_ui" feature with a 20% probability, so roughly 20% of users see the new UI.

- The application's behavior changes based on the state of each feature flag.

This approach lets teams test new features safely in production: a problematic feature can be switched off without redeploying the application.
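The `random.random() < 0.2` check in the example re-rolls on every run, so the same user could see the new UI one time and the old UI the next. A common alternative is to hash a stable user identifier into a bucket. This sketch assumes string user IDs; the function names are hypothetical and not part of any particular feature-flag library.

```python
import hashlib


def _stable_hash(*parts: str) -> int:
    """Deterministic 64-bit hash (unlike built-in hash(), which is salted per process)."""
    key = ":".join(parts).encode("utf-8")
    return int.from_bytes(hashlib.sha256(key).digest()[:8], "big")


def in_rollout(user_id: str, feature: str, percent: int) -> bool:
    """True for roughly `percent`% of users; a given user always gets the same answer."""
    return _stable_hash(feature, user_id) % 100 < percent


def ab_variant(user_id: str, experiment: str, variants: list[str]) -> str:
    """Assign a user to one variant of an A/B test, stable across requests."""
    return variants[_stable_hash(experiment, user_id) % len(variants)]
```

Because a user's bucket never changes, raising `percent` from 20 to 50 keeps the original 20% enrolled and adds new users. Including the feature or experiment name in the hash keeps assignments independent across features.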

Best Practices for Production Testing

To ensure effective and reliable testing, consider the following best practices:

- Establish a robust testing strategy: Define clear objectives, test cases, and success criteria for production testing. Identify critical user flows and prioritize testing efforts accordingly.

- Implement monitoring and alerting: Set up systems that track application performance, error rates, and user behavior in real use. Configure alerts to notify the team promptly of any anomalies or issues.

- Conduct regular tests: Schedule recurring tests, for example daily or weekly, to verify that the application works as expected and to catch issues early.

- Use feature flags: Implement feature flags to control the rollout of new features or changes in the production environment. This allows for gradual deployment and the ability to quickly disable problematic features if needed.

- Collaborate with operations teams: Foster close collaboration between development and operations teams to ensure smooth testing and efficient issue resolution. Establish clear communication channels and escalation paths.

- Maintain comprehensive documentation: Document the production testing process, including test cases, data preparation steps, and expected outcomes. This documentation serves as a reference for the team and facilitates knowledge sharing.
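To make the monitoring-and-alerting practice concrete, here is a minimal sliding-window error-rate check. The class name, window length, threshold, and minimum request count are assumptions, and in practice this logic usually lives in a dedicated monitoring system rather than in application code.

```python
import time
from collections import deque
from typing import Callable


class ErrorRateMonitor:
    """Track request outcomes in a sliding time window and flag high error rates."""

    def __init__(self, window_seconds: float, threshold: float,
                 min_requests: int = 20,
                 clock: Callable[[], float] = time.monotonic):
        self.window = window_seconds
        self.threshold = threshold
        self.min_requests = min_requests  # avoid alerting on tiny samples
        self.clock = clock
        self.events = deque()  # (timestamp, success) pairs, oldest first

    def record(self, success: bool) -> None:
        self.events.append((self.clock(), success))

    def _evict_old(self) -> None:
        cutoff = self.clock() - self.window
        while self.events and self.events[0][0] < cutoff:
            self.events.popleft()

    def error_rate(self) -> float:
        self._evict_old()
        if not self.events:
            return 0.0
        failures = sum(1 for _, ok in self.events if not ok)
        return failures / len(self.events)

    def should_alert(self) -> bool:
        rate = self.error_rate()  # also evicts stale events
        return len(self.events) >= self.min_requests and rate > self.threshold
```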

By following these practices, organizations can strengthen their testing process, reduce risk, and deliver high-quality software to users.

Conclusion

Production testing is important in software development. It helps organizations check how their applications work in real-life situations. By leveraging production data and conducting thorough tests, development teams can identify and address issues proactively, ensuring a smooth user experience.

However, using production data for testing comes with challenges and risks, such as data sensitivity, compliance, and performance impact. Organizations must implement robust security measures, establish clear policies, and strike a balance between production data and test data to ensure comprehensive testing coverage.

By understanding the basics of production testing, its best practices, and how to mitigate its risks, teams can make informed decisions, reduce the likelihood of errors, and deliver high-quality software.