pgvector: Protecting Data from Exposure via Vector Embeddings

The Hidden Risk of Vector Embeddings

Vector embeddings power GenAI applications, enabling semantic search, recommendation systems, and AI-driven insights. In PostgreSQL, the pgvector extension makes it possible to store high-dimensional embeddings and run fast similarity searches over them. But even though embeddings are just numbers, they can still leak sensitive data.

Can Vector Embeddings Actually Expose Sensitive Information?

Vector embeddings function like coordinates in a high-dimensional space. They don’t directly contain sensitive data, but they can still be exploited to reconstruct patterns. Protecting sensitive information means controlling what goes into embeddings and monitoring how they are queried.

If embeddings are generated from raw text containing personally identifiable information (PII) like names, SSNs, or addresses, the model may encode patterns that indirectly expose this information. Attackers can exploit nearest-neighbor searches to infer or partially reconstruct sensitive data, leading to compliance violations and security threats.

So, can vector embeddings actually expose sensitive information? Yes, under certain circumstances. While embeddings don’t store raw data, the way they encode relationships between data points means that sensitive information can be inferred from cleverly crafted queries. Depending on how the embeddings are generated and what information is used to create them, here’s how it can happen:

🔍 How Sensitive Data Can Get into Embeddings

1. Direct Encoding

- If embeddings are created from raw text containing sensitive information (e.g., SSNs, names, or addresses), the model may encode patterns that indirectly reveal them.

➡️Example: If "SSN: 123-45-6789" is part of an employee's profile used for embedding generation, the model may produce embeddings that, when queried in specific ways, return vectors that resemble or correlate with sensitive data patterns.

2. Implicit Data Correlation

- If embeddings are generated from structured data (e.g., employees' roles, salaries, and departments), patterns in this data might correlate with PII.

➡️Example: If an employee's SSN is embedded together with salary and department, a similarity search keyed on that SSN might surface the matching salary details.

3. Memorization by AI Models

- If an AI model trained on sensitive data generates embeddings, it may memorize and regurgitate specific details when prompted in a clever way.

➡️Example: If embeddings are built from employee names and roles, a retrieval step might return chunks containing personal info when asked about "employees in finance earning over $100K."

4. Reconstruction Risks

- In some cases, embeddings can be reverse-engineered using adversarial attacks, reconstructing parts of the original data.

➡️Example: If an attacker queries the system with specific input patterns, they might extract meaningful data from embeddings.

🔓 How Sensitive Data Can Be Exposed from Embeddings

Attackers or unintended queries may expose PII through:

- Nearest-Neighbor Searches – Finding embeddings closest to sensitive data patterns.

- Vector Clustering – Grouping similar embeddings to infer related personal details.

- Prompt Injection – Tricking the system into revealing stored sensitive content.

- Adversarial Attacks – Exploiting model weaknesses to reconstruct original input.
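To make the nearest-neighbor risk concrete, here is a minimal Python sketch. It assumes hand-made 3-dimensional vectors (hypothetical values; real embedding models produce hundreds or thousands of dimensions) and ranks stored rows by cosine distance, the measure pgvector's `<=>` operator computes. The names `store`, `cosine_distance`, and `nearest_neighbors` are illustrative only.

```python
import math

# Hypothetical toy "embeddings"; each vector is stored next to the text chunk
# it was generated from, as in a typical RAG table.
store = [
    ([0.9, 0.1, 0.0], "John Doe, SSN: 123-45-6789, earns $120,000"),
    ([0.1, 0.9, 0.1], "Quarterly report on office supplies"),
    ([0.0, 0.2, 0.9], "Cafeteria menu for next week"),
]


def cosine_distance(a, b):
    """1 - cosine similarity, the same measure pgvector's <=> operator returns."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def nearest_neighbors(query, k=1):
    """Return the text of the k stored rows whose vectors are closest to query."""
    ranked = sorted(store, key=lambda row: cosine_distance(query, row[0]))
    return [text for _, text in ranked[:k]]


# A probe vector steered toward "employee salary" territory.
probe = [0.8, 0.2, 0.1]
print(nearest_neighbors(probe))
# prints: ['John Doe, SSN: 123-45-6789, earns $120,000']
```

The vector itself holds no SSN; the exposure comes from the text chunk stored alongside it, which is exactly what the search hands back.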

Summary

Yes, sensitive data can leak into embeddings if they are generated without proper safeguards. If an AI system uses embeddings created from raw sensitive data, it may output similar information when queried cleverly.

Best practice: Never embed raw sensitive fields, and always sanitize data before vectorization.

Techniques to Prevent PII Leakage from Vector Embeddings

1. Data Sanitization Before Embedding Generation

Before converting data into vector embeddings, strip out or transform sensitive information so it never enters the vector space.

Remove PII Fields – Avoid embedding raw data like SSNs, names, and addresses.

Generalize Data – Instead of embedding exact salaries, categorize them into ranges.

Tokenization – Replace sensitive data with non-reversible identifiers.

Example: Instead of embedding:

"John Doe, SSN: 123-45-6789, earns $120,000"

Store: "Employee X, earns $100K-$150K"

This ensures PII never enters the vector store in the first place.
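The three sanitization steps can be combined in a short Python sketch. The record layout, the `TOKEN_KEY` secret, the `EMP-` token prefix, and the $50K band width are all hypothetical choices for illustration. Tokenization here uses a keyed hash (HMAC-SHA256), so a token cannot be reversed without the key; a plain unkeyed hash of a low-entropy value such as an SSN could be brute-forced.

```python
import hashlib
import hmac
import re

# Hypothetical secret; keep it outside the vector store (e.g., in a secrets manager).
TOKEN_KEY = b"replace-with-a-real-secret"

# U.S. SSN shape: three digits, two digits, four digits.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def tokenize(value: str) -> str:
    """Replace an identifier with a keyed, non-reversible token (HMAC-SHA256)."""
    digest = hmac.new(TOKEN_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "EMP-" + digest[:8]


def salary_band(salary: int, width: int = 50_000) -> str:
    """Generalize an exact salary into a range, e.g. 120000 -> '$100K-$150K'."""
    low = (salary // width) * width
    return f"${low // 1000}K-${(low + width) // 1000}K"


def sanitize_for_embedding(record: dict) -> str:
    """Build the text sent to the embedding model; SSN and address are dropped."""
    text = f"Employee {tokenize(record['name'])}, earns {salary_band(record['salary'])}"
    if record.get("notes"):
        # Safety net for free-text fields: redact anything shaped like an SSN.
        text += ". " + SSN_PATTERN.sub("[REDACTED]", record["notes"])
    return text


# Hypothetical source record.
record = {
    "name": "John Doe",
    "ssn": "123-45-6789",
    "address": "1 Main St",
    "salary": 120_000,
    "notes": "Handles payroll questions for SSN 123-45-6789",
}
print(sanitize_for_embedding(record))
```

Only the sanitized string is passed to the embedding model; the raw record stays in its source table under the usual access controls.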

2. Sensitive Data Masking in Queries and Responses

Even if raw PII was embedded, or embeddings encode patterns related to PII, you can still mask or obfuscate sensitive data during retrieval.

Dynamic Data Masking – Redact or transform sensitive output before it reaches users.

Real-time Query Filtering – Block unauthorized similarity searches on embeddings.

Access Control & Role-Based Restrictions – Limit vector search access to trusted users.

Example: If a user queries embeddings and retrieves a data chunk containing PII:

Original output: "John Doe's salary is $120,000"

Masked output: "Employee X's salary is $1XX,000"

This prevents unintended exposure of sensitive information.
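A minimal sketch of response-side masking in Python that reproduces the example above. It assumes a hypothetical `KNOWN_NAMES` list supplied by an earlier sensitive-data discovery step, and salaries written as dollar amounts below $1,000,000 with a thousands separator; a production masking layer would rely on broader detection rules.

```python
import re

# Hypothetical list of names flagged by a sensitive-data discovery step.
KNOWN_NAMES = ["John Doe", "Jane Roe"]

# Dollar amounts with one thousands separator, e.g. $120,000 or $95,500
# (assumes amounts below $1,000,000).
SALARY_PATTERN = re.compile(r"\$(\d)(\d*),\d{3}\b")


def mask_salary(match: re.Match) -> str:
    """Keep the leading digit and hide the rest: $120,000 -> $1XX,000."""
    return f"${match.group(1)}{'X' * len(match.group(2))},000"


def mask_response(text: str) -> str:
    """Redact known names and salary figures in a retrieved chunk."""
    for name in KNOWN_NAMES:
        text = text.replace(name, "Employee X")
    return SALARY_PATTERN.sub(mask_salary, text)


print(mask_response("John Doe's salary is $120,000"))
# prints: Employee X's salary is $1XX,000
```

Masking runs after retrieval and before display, so it works even when the underlying chunks were embedded without sanitization.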

Proactive vs. Reactive Approaches to Data Security for Vector Embeddings

1️⃣ Proactive Security – Applying PII Protection Pre-Embedding

This approach ensures that sensitive data never enters the vector embedding in the first place.

How?

Sanitize structured data before vectorization. ✅

Mask sensitive information before embedding. ✅

Use tokenization to replace identifiable values. ✅

Apply differential privacy techniques to introduce noise. ✅

Benefit: This approach removes the risk at the source: PII that never enters the embeddings cannot be revealed by querying them.
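For the noise-injection item in the list above, one textbook option is the Gaussian mechanism: clip each embedding to a maximum L2 norm, then add Gaussian noise scaled to that bound. The Python sketch below assumes each vector is released on its own (so the L2 sensitivity is twice the clip norm) and uses the classic noise formula, which holds only for epsilon below 1. The function names and parameter defaults are hypothetical and illustrative, not recommendations.

```python
import math
import random


def clip(vector, max_norm):
    """Scale the vector down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(x * x for x in vector))
    if norm <= max_norm:
        return list(vector)
    return [x * max_norm / norm for x in vector]


def gaussian_sigma(epsilon, delta, sensitivity):
    """Classic Gaussian-mechanism noise scale; only valid for 0 < epsilon < 1."""
    if not 0 < epsilon < 1:
        raise ValueError("this formula requires 0 < epsilon < 1")
    return math.sqrt(2 * math.log(1.25 / delta)) * sensitivity / epsilon


def privatize_embedding(vector, epsilon=0.5, delta=1e-5, max_norm=1.0, rng=None):
    """Clip the embedding, then add Gaussian noise calibrated to the clip bound."""
    rng = rng or random.Random()
    clipped = clip(vector, max_norm)
    # Any two clipped vectors are at most 2 * max_norm apart (L2 sensitivity).
    sigma = gaussian_sigma(epsilon, delta, sensitivity=2 * max_norm)
    return [x + rng.gauss(0.0, sigma) for x in clipped]


noisy = privatize_embedding([0.6, 0.8, 0.0], rng=random.Random(42))
print(len(noisy), round(gaussian_sigma(0.5, 1e-5, 2.0), 2))
```

With these defaults, sigma comes out near 19, far larger than a unit-length embedding, so similarity search on the noisy vectors would be close to random. That is the privacy/utility trade-off in miniature: stronger guarantees mean more noise, so epsilon has to be chosen with retrieval quality in mind.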

2️⃣ Reactive Security – Auditing and Masking Post-Embedding

This approach assumes embeddings already contain references to sensitive information and focuses on detecting and masking PII during retrieval.

How?

Find sensitive information used to create vector embeddings. ✅

Apply real-time masking before displaying retrieved data. ✅

Restrict unauthorized queries from accessing sensitive embeddings. ✅

Monitor vector similarity queries to detect anomalous access patterns. ✅

Benefit: Even if sensitive information already exists in embeddings, this method keeps it from being exposed to unauthorized users during retrieval.
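The restriction and monitoring steps can be sketched in Python as a small gate in front of the similarity search. The role names, the 20-searches-per-60-seconds threshold, and the `check_vector_query` function are hypothetical. A volume spike is only one simple signal of anomalous access; inside PostgreSQL itself, access can additionally be limited with roles, `GRANT`, or row-level security.

```python
import time
from collections import defaultdict, deque

# Hypothetical policy values; tune them to your own workload.
ALLOWED_ROLES = {"analyst", "hr_admin"}
MAX_QUERIES = 20        # similarity searches allowed per user ...
WINDOW_SECONDS = 60.0   # ... within this sliding window

_recent_queries = defaultdict(deque)  # user -> timestamps of recent searches


def check_vector_query(user, role, now=None):
    """Return 'deny' for disallowed roles, 'flag' for unusual volume, else 'allow'."""
    if role not in ALLOWED_ROLES:
        return "deny"
    now = time.monotonic() if now is None else now
    window = _recent_queries[user]
    window.append(now)
    # Forget searches that have fallen out of the sliding window.
    while now - window[0] > WINDOW_SECONDS:
        window.popleft()
    return "flag" if len(window) > MAX_QUERIES else "allow"


# Simulate one user firing 21 searches in about 10 seconds.
results = [check_vector_query("mallory", "analyst", now=i * 0.5) for i in range(21)]
print(results[-1])
# prints: flag
```

A "flag" result would typically be written to the audit log and might trigger masking or blocking for that session rather than an outright failure.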

🎯 The Best Security Strategy? – Use BOTH

The strongest security comes from combining both methods:

- Proactive sanitization prevents embedding sensitive data.

- Reactive monitoring ensures existing embeddings don't leak PII.

How DataSunrise Secures Data behind Vector Embeddings

DataSunrise provides a comprehensive security solution for protecting data referenced by pgvector embeddings before and after they are created.

🛡️ Proactive Protection: Securing Source Data Pre-Embedding

For organizations dealing with vast amounts of structured and unstructured data, DataSunrise helps by:

- Detecting PII before it becomes part of an embedding.

- Masking sensitive data before vectorization.

- Using data anonymization techniques to strip out specific personal details.

Example: Before embedding customer profiles, DataSunrise can scan the data storage for sensitive data, remove SSNs, anonymize addresses, and generalize financial data, ensuring the vectorized representation contains no private details.

🛡️ Reactive Protection: Securing Source Data with Existing Embeddings and AI Applications

If an AI application is already running with embeddings containing references to sensitive data, DataSunrise offers:

- Sensitive data discovery for data used in embedding creation.

- Dynamic masking of sensitive query results.

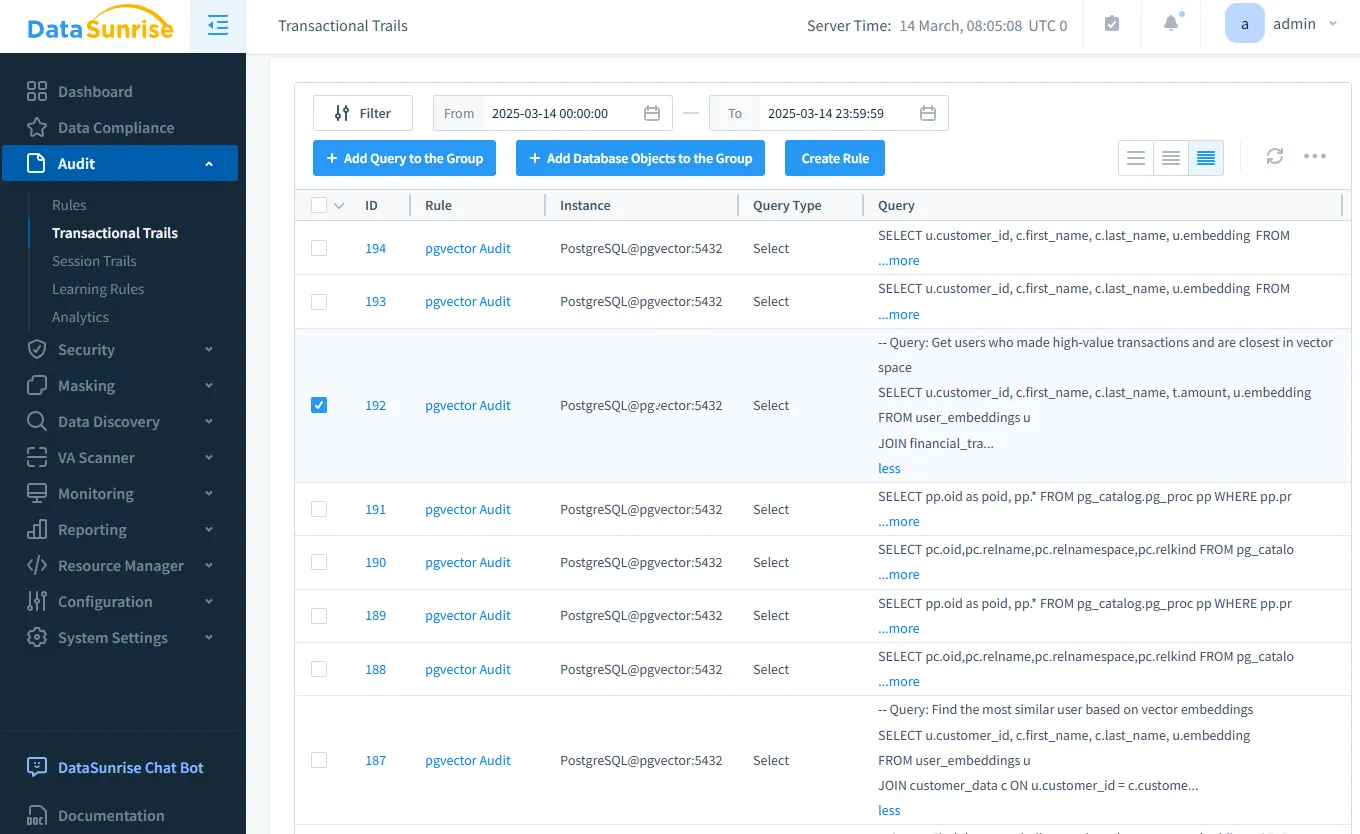

- Real-time auditing to detect unauthorized vector similarity searches.

Example: If an attacker tries to query embeddings for data that might contain PII, DataSunrise tracks and monitors such attempts and masks sensitive information before it's exposed.

The table below illustrates DataSunrise's comprehensive approach to securing vector embeddings, addressing both prevention and detection of sensitive data exposure:

| Feature | Proactive Protection | Reactive Protection |
|---|---|---|
| Data Discovery | Identifies sensitive data before embedding | Analyzes embedding sources to detect potential PII exposure |
| Data Audit | Logs embedding generation | Detects suspicious queries |
| Data Security | Prevents PII in embeddings | Blocks unauthorized vector searches |
| Data Masking | Hides sensitive data before embedding | Masks sensitive info on retrieval |

Conclusion: A Dual-Layered Approach to Security

Vector embeddings in pgvector are powerful, but they can expose sensitive data if not handled correctly. The best approach is to combine proactive and reactive security techniques to minimize risks.

🔹 Before embeddings are created – Sanitize, mask, and control data access.

🔹 After embeddings exist – Audit, monitor, and mask PII in GenAI responses.

To secure vector embeddings in PostgreSQL with pgvector, organizations should:

- ✅ Use proactive measures to prevent PII from entering embeddings.

- ✅ Implement reactive security to monitor and mask retrieved information.

- 🛡️ Leverage DataSunrise to detect, protect, and prevent sensitive data exposure at every stage.

DataSunrise enables both strategies, ensuring AI-powered applications stay secure and compliant. Whether you're building a new AI system or securing an existing one, DataSunrise provides end-to-end protection for sensitive vectorized data.

By integrating DataSunrise security features, businesses can use their data for vector embeddings without risking data privacy violations.

Need to Secure Your Vector Embeddings Data? Schedule a DataSunrise demo today to safeguard your GenAI applications!