What is Dynamic Data?

In today’s fast-paced digital world, which produces 5 exabytes of data every day, data is the lifeblood of businesses and organizations. But not all data is created equal.

Some data remains constant, while other data changes rapidly. This ever-changing information is what we call dynamic data. It is essential for real-time decision-making in industries like finance and IoT, but managing it comes with challenges such as ensuring accuracy and security. In this article, we’ll dive deep into the world of constantly changing data, exploring its nature, types, and the challenges it presents in data management.

The Nature of Dynamic Data

Dynamic data is information that changes frequently, often in real time. Unlike static data, which remains constant over time, it is fluid and responsive to external factors. This characteristic makes it both valuable and challenging to manage.

Why This Data Matters

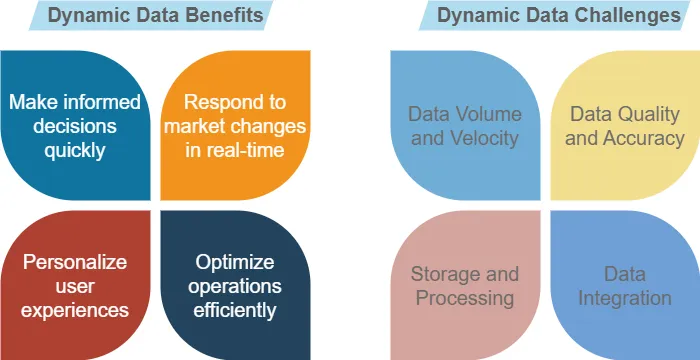

In an era where information is power, data received just in time provides up-to-the-minute insights. It brings businesses clear benefits, but it also confronts them with some challenges. The picture above shows some of them.

For instance, a weather app relies on dynamic data to provide accurate forecasts. As conditions change, so does the data, ensuring users always have the most current information.

Types of Dynamic Data

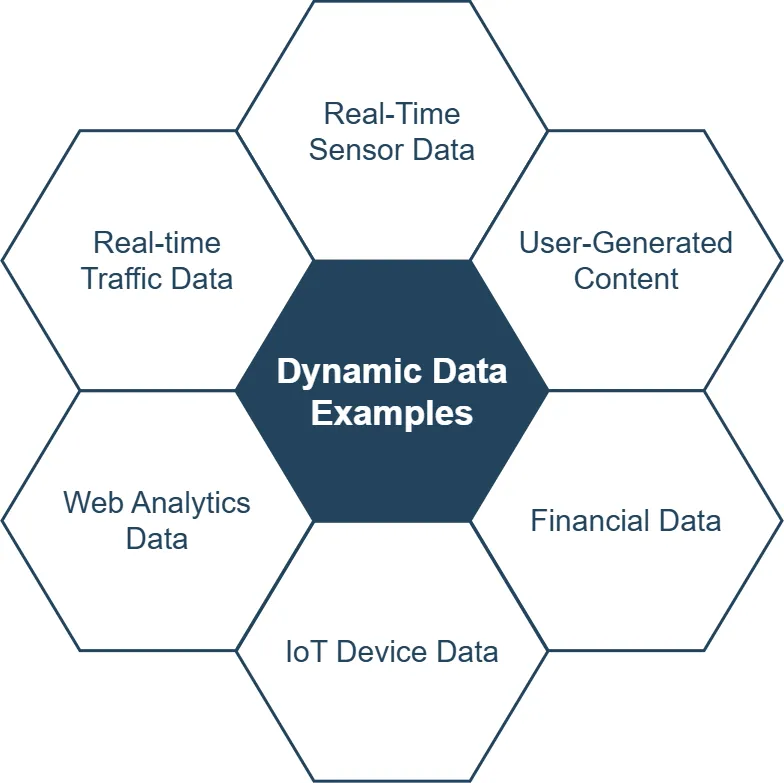

Dynamic data comes in various forms, each with its own characteristics and applications. Let’s explore some common types:

1. Real-Time Sensor Data

Sensors continuously collect data from the physical world. This includes:

- Temperature readings

- Humidity levels

- Motion detection

- GPS coordinates

For example, smart home devices use sensor data to adjust heating and cooling systems automatically.

2. User-Generated Content

Social media platforms are a prime example of dynamic data in action. Users constantly create new posts, comments, and reactions, generating a steady stream of dynamic content.

3. Financial Data

Stock prices, exchange rates, and cryptocurrency values fluctuate constantly. Financial institutions rely on this dynamic data for trading and investment decisions.

4. IoT Device Data

The Internet of Things (IoT) generates vast amounts of data. Connected devices continuously transmit information about their status, usage, and environment.

5. Web Analytics Data

Websites and apps collect real-time data on user behavior, including:

- Page views

- Click-through rates

- Session duration

- Conversion rates

This type of data helps businesses optimize their online presence and marketing strategies.

Challenges in Managing Dynamic Data

While dynamic data offers numerous benefits, it also presents unique challenges for data management processes.

1. Data Volume and Velocity

The sheer amount of data generated can be overwhelming, and collecting it is complex as well. Organizations must have robust systems in place to handle high-velocity data streams.

2. Data Quality and Accuracy

With rapidly changing data, ensuring accuracy becomes more challenging. Outdated or incorrect information can lead to poor decision-making. Always improve data quality before drawing insights from the data.
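
One simple way to guard against outdated values is to attach a capture time to every data point and reject anything older than a chosen limit. The following is a minimal sketch of that idea, not a prescribed method: the `Reading` class, the `is_fresh` helper, the device names, and the 30-second limit are all illustrative assumptions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Reading:
    """A single dynamic data point with the time it was captured."""
    source: str
    value: float
    captured_at: datetime


def is_fresh(reading: Reading, now: datetime, max_age: timedelta) -> bool:
    """Return True if the reading is no older than max_age at time `now`."""
    age = now - reading.captured_at
    # A negative age means the timestamp lies in the future, which is also rejected.
    return timedelta(0) <= age <= max_age


# Fixed timestamps keep the example deterministic (hypothetical values).
now = datetime(2024, 1, 1, 12, 0, 0, tzinfo=timezone.utc)
recent = Reading("thermostat-1", 21.5, now - timedelta(seconds=5))
stale = Reading("thermostat-2", 19.0, now - timedelta(minutes=10))

max_age = timedelta(seconds=30)  # assumed freshness limit
usable = [r for r in (recent, stale) if is_fresh(r, now, max_age)]
print([r.source for r in usable])
```

In a live system `now` would come from the clock at decision time, and the freshness limit would depend on how quickly the underlying value can change.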

3. Storage and Processing

Dynamic data requires flexible storage solutions and efficient processing capabilities to handle real-time updates and queries.

4. Data Integration

Combining dynamic data from multiple sources can be complex. Ensuring consistency and coherence across different data streams is crucial.
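
Streams from different sources rarely share exact timestamps, so one common way to combine them is an "as-of" join: each record from one stream is paired with the most recent record from the other. The sketch below illustrates the idea with the standard library only. The two feeds, their values, and the helper names are hypothetical, and both streams are assumed to be sorted by timestamp.

```python
from bisect import bisect_right

# (timestamp_seconds, temperature_celsius) from a hypothetical sensor feed
temperatures = [(0, 20.0), (10, 20.5), (20, 21.0)]
# (timestamp_seconds, humidity_percent) from a second hypothetical feed
humidity = [(3, 40), (12, 42), (27, 45)]


def latest_at_or_before(stream, ts):
    """Return the value of the most recent record with timestamp <= ts, or None.

    Assumes `stream` is sorted by timestamp.
    """
    timestamps = [t for t, _ in stream]
    idx = bisect_right(timestamps, ts)
    return stream[idx - 1][1] if idx > 0 else None


def as_of_join(left, right):
    """Attach to each left record the latest right value seen at or before it."""
    return [(ts, value, latest_at_or_before(right, ts)) for ts, value in left]


combined = as_of_join(humidity, temperatures)
for row in combined:
    print(row)  # (timestamp, humidity, temperature as of that timestamp)
```

Libraries such as pandas offer a comparable operation (`merge_asof`) for larger datasets; the hand-written version here only shows the matching logic.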

5. Security and Privacy

Protecting dynamic data presents unique security challenges. As data changes rapidly, maintaining proper access controls and encryption becomes more complex.

Optimal Data Processing for Changing Data

To harness the power of constantly changing data, organizations need to implement optimal data processing strategies.

Traditional batch processing methods often fall short when dealing with rapidly changing data. Real-time processing techniques, such as stream processing, allow for immediate data analysis and action.

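Example:

    from pyspark import SparkContext
    from pyspark.streaming import StreamingContext

    # Create a local SparkContext with two threads when run as a standalone script:
    # one thread receives the stream, the other processes it
    sc = SparkContext("local[2]", "NetworkWordCount")

    # Create a StreamingContext with a 1-second batch interval
    ssc = StreamingContext(sc, 1)

    # Create a DStream that connects to a data source
    lines = ssc.socketTextStream("localhost", 9999)

    # Process the stream: split lines into words, then count each word per batch
    word_counts = (
        lines.flatMap(lambda line: line.split(" "))
        .map(lambda word: (word, 1))
        .reduceByKey(lambda a, b: a + b)
    )

    # Print the results
    word_counts.pprint()

    # Start the computation and block until it is stopped
    ssc.start()
    ssc.awaitTermination()

This PySpark code demonstrates real-time processing of a text stream, counting words as they arrive.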

Code explanation

The PySpark Streaming code above does the following:

- First, it imports StreamingContext from PySpark’s streaming module.

- It creates a StreamingContext (ssc) with a 1-second batch interval. This means the streaming computation will be divided into 1-second batches.

- It sets up a DStream (Discretized Stream) that connects to a data source. In this case, it’s reading from a socket on localhost at port 9999. Other streaming sources could be used in its place.

- The code then processes the stream:

  - It splits each line into words

  - It maps each word to a key-value pair (word, 1)

  - It reduces by key, which effectively counts the occurrences of each word

- It prints the results of the word count.

- Finally, it starts the computation and waits for it to terminate.

This code essentially sets up a real-time word count system. It continuously reads text data from the specified socket, counts the words in each one-second batch, and prints the results. The counts are per batch rather than running totals.

It’s a simple but powerful example of how PySpark Streaming can be used for real-time data processing. In a real-world scenario, you might replace the socket source with a more robust data stream (like Kafka) and do more complex processing or store the results in a database instead of just printing them. Note also that the DStream API used here is Spark’s older streaming API; recent Spark documentation recommends Structured Streaming for new applications.
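
To make the batch interval idea concrete without a Spark cluster, here is a plain-Python sketch that groups timestamped lines into one-second batches and counts the words in each. It only imitates the behavior described above and is not how Spark implements it; the sample events and the `count_words_per_batch` helper are made up for illustration.

```python
from collections import Counter, defaultdict

# (arrival_time_seconds, text) pairs standing in for lines read from a socket
events = [
    (0.1, "to be or not"),
    (0.7, "to be"),
    (1.2, "that is the question"),
    (2.5, "to be"),
]

BATCH_INTERVAL = 1.0  # seconds, mirroring StreamingContext(sc, 1)


def count_words_per_batch(events, interval):
    """Group events into fixed-size time batches and count words in each batch."""
    batches = defaultdict(Counter)
    for arrival_time, line in events:
        batch_id = int(arrival_time // interval)
        batches[batch_id].update(line.split())
    return dict(batches)


result = count_words_per_batch(events, BATCH_INTERVAL)
for batch_id in sorted(result):
    print(batch_id, dict(result[batch_id]))
```

Each batch is counted independently, which is why the word "to" is reported separately for the first and third seconds instead of as a single running total.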

Scalable Infrastructure

To handle the volume and velocity of dynamic data, scalable infrastructure is essential. Cloud-based solutions and distributed systems offer the flexibility needed to adapt to changing data loads.

Data Quality Monitoring

Implementing automated data quality checks helps maintain the accuracy and reliability of dynamic data. This includes:

- Validating data formats

- Checking for outliers

- Ensuring data completeness
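
The three checks listed above can be sketched with the Python standard library as follows. This is an illustration under stated assumptions rather than a complete validation framework: the field names, the simplified email pattern, and the sample values are hypothetical, and the outlier rule uses the modified z-score (median and median absolute deviation) with the commonly cited 3.5 cutoff, which is only one of several possible conventions.

```python
import re
import statistics

# Deliberately simple pattern; real email validation is more involved.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")
REQUIRED_FIELDS = ("device_id", "email", "temperature")  # assumed schema


def check_completeness(record):
    """Return the required fields that are missing or None."""
    return [f for f in REQUIRED_FIELDS if record.get(f) is None]


def check_format(record):
    """Return True if the email field looks like an address."""
    return bool(EMAIL_PATTERN.match(str(record.get("email", ""))))


def find_outliers(values, threshold=3.5):
    """Flag values whose modified z-score exceeds the threshold."""
    median = statistics.median(values)
    mad = statistics.median([abs(v - median) for v in values])
    if mad == 0:
        return []  # no spread, so nothing can be ranked as an outlier
    return [v for v in values if 0.6745 * abs(v - median) / mad > threshold]


records = [
    {"device_id": "a1", "email": "ops@example.com", "temperature": 20.1},
    {"device_id": "a2", "email": "not-an-email", "temperature": 20.4},
    {"device_id": None, "email": "dev@example.com", "temperature": 19.8},
]
temperatures = [20.1, 20.4, 19.8, 20.0, 85.0, 20.3]

for record in records:
    print(check_completeness(record), check_format(record))
print(find_outliers(temperatures))
```

In a streaming setting these functions would run on each incoming batch, with failing records routed to a review queue instead of being silently dropped.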

Dynamic Data Security: Protecting Fluid Information

Securing this data requires a proactive and adaptive approach. Here are some key strategies:

1. Encryption in Transit and at Rest

Ensure that dynamic data is encrypted both when it’s moving between systems and when it’s stored.

2. Real-Time Access Control

Implement dynamic access control mechanisms that can adapt to changing data and user contexts.
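
As a rough illustration, an access decision that adapts to context can take the request's circumstances into account in addition to the user's role. The sketch below is a toy policy, not a reference design: the roles, sensitivity levels, business-hours window, and the `AccessRequest` and `is_allowed` names are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    role: str
    resource_sensitivity: str  # "public", "internal", or "restricted"
    from_trusted_network: bool
    hour_of_day: int  # 0-23, in the organization's local time


def is_allowed(request: AccessRequest) -> bool:
    """Decide access from the role plus the context of the request."""
    if request.resource_sensitivity == "public":
        return True
    if request.resource_sensitivity == "internal":
        return request.role in {"analyst", "admin"}
    if request.resource_sensitivity == "restricted":
        in_business_hours = 8 <= request.hour_of_day < 18
        return (
            request.role == "admin"
            and request.from_trusted_network
            and in_business_hours
        )
    return False  # unknown sensitivity levels are denied by default


office_admin = AccessRequest("admin", "restricted", True, 10)
remote_admin = AccessRequest("admin", "restricted", False, 10)
print(is_allowed(office_admin), is_allowed(remote_admin))
```

The same user receives different answers depending on network and time of day, which is the adaptive behavior the strategy describes.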

3. Continuous Monitoring

Use real-time monitoring tools to detect and respond to security threats as they emerge.
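
A minimal sketch of one monitoring rule is shown below: it flags a user after several failed logins inside a sliding time window. The `FailedLoginMonitor` class, the thresholds, and the sample events are hypothetical, and a production system would typically rely on a dedicated monitoring tool rather than hand-written code like this.

```python
from collections import defaultdict, deque


class FailedLoginMonitor:
    """Flags users with too many failed logins inside a sliding time window."""

    def __init__(self, max_failures=3, window_seconds=60):
        self.max_failures = max_failures
        self.window_seconds = window_seconds
        self._failures = defaultdict(deque)  # user -> timestamps of failures

    def record_failure(self, user, timestamp):
        """Record a failed login and return True if the user should be flagged."""
        window = self._failures[user]
        window.append(timestamp)
        # Drop failures that have fallen out of the sliding window.
        while window and timestamp - window[0] > self.window_seconds:
            window.popleft()
        return len(window) >= self.max_failures


monitor = FailedLoginMonitor(max_failures=3, window_seconds=60)
# (user, timestamp_seconds) pairs standing in for a live event stream
events = [("alice", 0), ("alice", 20), ("bob", 25), ("alice", 45), ("bob", 200)]
alerts = [(user, ts) for user, ts in events if monitor.record_failure(user, ts)]
print(alerts)
```

Because old failures expire from the window, a user who mistypes a password occasionally is not flagged, while a burst of failures is.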

4. Data Anonymization

When dealing with sensitive dynamic data, consider anonymization techniques to protect individual privacy while preserving data utility.

Example:

    import pandas as pd
    from faker import Faker

    # Load dynamic data
    df = pd.read_csv('user_data.csv')

    # Initialize Faker
    fake = Faker()

    # Anonymize sensitive columns by replacing each value with a generated one
    df['name'] = df['name'].apply(lambda x: fake.name())
    df['email'] = df['email'].apply(lambda x: fake.email())

    # Save anonymized data
    df.to_csv('anonymized_user_data.csv', index=False)

This Python script demonstrates a simple data anonymization process for dynamic user data.
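
The Faker-based script draws a new random value for every row, so the same person receives a different name and email each time the data is refreshed. When records must remain linkable across updates, a keyed hash is one alternative. The sketch below assumes this variant fits the use case; note that it is pseudonymization rather than full anonymization, and that the key, the `pseudonymize` helper, and the sample rows are placeholders.

```python
import hashlib
import hmac

# Assumption: in practice the key would come from a secrets manager, not source code.
SECRET_KEY = b"replace-with-a-real-secret"


def pseudonymize(value: str, key: bytes = SECRET_KEY) -> str:
    """Return a stable, keyed token for a sensitive value."""
    normalized = value.strip().lower().encode("utf-8")
    digest = hmac.new(key, normalized, hashlib.sha256)
    return digest.hexdigest()[:16]  # shortened for readability


rows = [
    {"email": "jane@example.com", "page_views": 12},
    {"email": "Jane@Example.com ", "page_views": 3},
    {"email": "omar@example.com", "page_views": 7},
]
anonymized = [{**row, "email": pseudonymize(row["email"])} for row in rows]
for row in anonymized:
    print(row)
```

The first two rows map to the same token because the input is normalized before hashing, so activity can still be aggregated per user without exposing the address itself.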

The Future of Data Science

As technology continues to evolve, the importance and prevalence of dynamic data will only grow. Emerging trends include:

- Edge Computing: Processing this type of data closer to its source for faster insights

- AI-Driven Analytics: Using machine learning to extract deeper insights from dynamic data streams

- Blockchain for Data Integrity: Ensuring the authenticity and traceability of dynamic data

Conclusion: Embracing the Dynamic Data Revolution

Dynamic data is transforming how we understand and interact with the world around us. From real-time business insights to personalized user experiences, its impact is far-reaching. While managing dynamic data presents challenges, the benefits far outweigh the difficulties.

By implementing robust data management processes, optimal processing strategies, and strong security measures, organizations can harness the full potential of dynamic data. Using dynamic data effectively provides a significant advantage in our data-driven world.

For businesses looking to secure and manage their data effectively, DataSunrise offers user-friendly and flexible tools for database security and compliance on-premises and in the cloud. Visit our website at DataSunrise for an online demo and discover how we can help you protect your valuable data assets.